- Slides

- Questions

- On the reproducibility

- On the Models

- Tool Versatility

- Accurate Modeling of Computations

- SimGrid in Teaching Settings

- High Performance Simulation and Hardware Accelerators

- Very Long Term Simulations

- Scalability in Applicative Workload

- Practical Efficiency of Model Checking

- Energy Considerations

- Methodology Convergence

- Photos

Today is a great day! I finally defended my habilitation thesis!

This diploma allows you to advise PhD students on your own, and you also need it to apply to full professor positions. My manuscript that is 100 pages long, just like my first thesis (but in English this time). I still have a few corrections to apply, but here is the current version.

Slides

Questions

I had a whole set of interesting questions, that I will try to summarize here. The answers given here are what I answered live for some parts, and what I should have answered for most parts. Neither my transcript nor the answers I provide on this page are perfect, but this page was too much delayed already.

On the reproducibility

I present the reproducibility of simulation studies as one of my goals, but am I sure that the simulator itself perfectly returns the exact same results each time? Even on another computer? How is proved?

My answer to this question was rather bad, because it surprised me. At first, I thought that the question was on the reproducibility of experiments built with the simulator, and that the point on the tool was a parenthesis in the question.

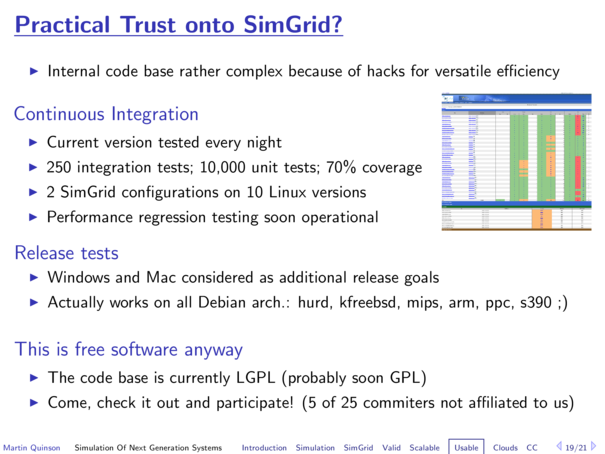

I argued that the software is sufficiently tested to be rather confident, but I was not able to prove it in any way during the defense. I should have pointed to our cdash or to our jenkins infrastructures. The simulation kernel (including the models) represent only one third of the source code in size, the rest being examples and tests. We have over 10,000 unit tests and about 250 integration tests, that are non-trivial simulation scenarios for which we check that the date at which each even is handled remains exactly the same. These tests pass on all Debian architectures (Linux kernel on 11 processor architectures, the Hurd micro-kernel on one architecture and FreeBSD kernel on 2 architectures), on several other variants of Linux (such as Fedora and Ubuntu) as well as Mac OSX. Our testing infrastructure is less developed under windows, but each time we launched the tests manually, they succeeded on this architecture family too.

These are the elements that make me confident in the reproducibility of the software tool. But I feel that these elements are ways too technical to be presented in my manuscript or in my presentation. I speak about it when I present the tool as is (as in Louvain last November, where the slide reproduced below was dedicated to these considerations), but not in here.

For some (probably stupid) reasons, I was reluctant to dive into the technical

details where the examiner wanted to drive me during this Habilitation

defense. And even more without that particular slide that was not part of my set

of additional slides. The resulting tension was very tangible, as reported then

by my wife

Gabriel was right: we did not prove that our tool is not 100% reproducible on all situations on all architectures. And actually, it is not. For example, we use double values, so architecture difference may result in some changes in some (border) cases.

The right answer would have been that SimGrid is "reproducible enough". For example, would you believe that an accelerometer or a NMR spectrometer can be proved to be 100% reproductible in subtly different situations? It's impossible, but scientists get past such limitations by taking them into account in their methodology. As for SimGrid, you should not aim to perfectly reproduce the experimental results to the fifth decimal, but rather ensure that the same set of experiments will lead to the same scientific conclusions.

I feel bad my answer to this question was so partial...

As a side note, we plan to remove the doubles that are currently used to represent the dates and use integer instead. This would offer a better control of the time discretization, that sometimes hurt us. We experienced small numerical stability issues in the past, but so far we managed to fix them rather easily. More surprisingly, this also strikes in the parallel simulation setting as this tends to increase the amount of timestamps to handle in the simulation. Spreads the work (that is constant) over more timestamps (where parallel handling occur) tend to reduce the amount of potential parallelism. We speak of using integer date since a few years, but never found the time to do so for now, unfortunately. This would not be a definitive answer to the tool reproducibility, but yet another argument in this direction.

On the Models

Gabriel also asked more details about the models. This question should have been expected, as this is about the main difference between SimGrid and the classical DEVS simulators that Gabriel usually works on.

In SimGrid, we have very specific ways to express the application and platform: the application is simply given by the users as a sort set of entities that interact through message passing. This can be seen as a multi-threaded system where threads only interact through message passing. Then, the platform dynamics, that depends on the behavior of TCP of the CPU or whatever, is actually hard-coded in SimGrid. We would like to make them easily modifiable by the users, but we are not quite there yet and (almost?) all our users take our platform models unchanged. The tool realism and accuracy that we sell in the presentations come from these platform models.

On the contrary, DEVS simulator are completely agnostic simulators where both the application and the platform are expressed in a very similar way. The question of Gabriel was probably to see whether the good performance exhibited by SimGrid could be reused for more general simulations, as in DEVS systems. This is not trivial, as we really leverage the specific structure of simulators that are dedicated to the simulation of distributed applications. But we have a work plan on this very aspect within the SONGS project. Here is what we wrote on this topic in the proposal: SimGrid offers its own set of specialized and optimized tools for Modeling & Simulation, with a strong emphasis on execution performance. However, during the early stages of a simulation study, simulation performance is less critical than modeling agility. Hence, users may need a flexible "sandbox" framework that allows them to quickly design new prototype models and validate their modeling assumptions. For this purpose we propose to use the DEVS framework, a well established discrete-event simulation formalism. Numerous simulation tools have been built for this formalism which provides the "sandboxing" flexibility needed in SimGrid for free. As a counterpart, we need to design and develop conversion tools between the DEVS sandboxing models and the SimGrid high performance modeling APIs. This tasks aims at providing this seamless integration of the DEVS framework within SimGrid. This work is not done yet, but that's no reason to not speak of it when asked about it. Sorry.

To further complicate things, we use another formalism when we speak of model checking: the model is then application, not the platform anymore. This "potentially" misleading vocabulary was not clear to me before the question session, explaining that my answer to this question too was a bit intermixed. It's much better for me now, and I hope that this little text makes it better for others too.

Tool Versatility

The last question of Gabriel was that most of my examples were about P2P systems while I was saying that the simulator was generic and versatile. Indeed, the chord protocol is over-represented in my slides, but SimGrid was actually used in other contexts, as demonstrated by the last three additional slides.

This question was also a reformulation of the previous one, and I must say that studying TCP modeling within SimGrid is much harder than in a DEVS framework since our platform models are meant for efficiency, not for ease of modification. In that sense, SimGrid may become even more versatile. We're on it -- stay turned.

Accurate Modeling of Computations

I got some questions on the modeling of computations, that is a very though topic. Jean-François was wondering whether we could reuse some internal structures that the compiler builds out of the application to improve the prediction accuracy, but I soon got to my specialization limits in this area. Arnaud is the modeling master out there, and we will have to wait for his own Habilitation to get more definitive answers on this.

SimGrid in Teaching Settings

I was asked whether SimGrid could be used in teaching settings. Unfortunately, we don't have enough of such things for now. I contacted the author of this lecture collection, proposing to add some exercises based on SimGrid. The idea was warmly welcomed, and this is now one of the multiple projects I should push further. The way Kompics is used in teachings is also inspiring.

Actually, there was a large part on such considerations in an IOF project proposal that I wrote in 2009. I was planning to visit Henri for one year to benefit of his mentoring in order to write my habilitation. The broad topic was simulation and Exascale (already), and SMPI was seen mainly as an educational tool as we were not sure at this point that it could evolve into actually usable to predict performance. But the project got rejected by its reviewers, and only some parts of the work plan were implemented in between; This educational dimension got a bit neglected for now.

So much to do and so few time

High Performance Simulation and Hardware Accelerators

Jean-François also asked whether we considered leveraging GPUs and other hardware accelerators to further speedup SimGrid. I'm not sure. Last time I've checked, these systems were really fast on very regular problems, but rather inefficient on irregular ones. As SimGrid simulations are clearly irregular, I didn't investigate further. Jean-François was meaning that this is evolving with recent accelerators. I'll have to look again what's doable here, then.

Very Long Term Simulations

Pierre asked whether SimGrid could be used to predict what will happen in the system after a few years of operations. My answer was that I'm confident in the fact that the tool will manage to do such computation efficiently, but I'm not sure I'd trust the computed result.

For me, this is as for weather forecasting: with time, the little divergences of your model with reality become too important to trust the simulations. I'm thinking of it since my PhD actually, as we had a very good presentation on the epistemology of weather forecasts. They are using very specific techniques to predict further in the future: they don't run a single simulation but use use a set of models and initial states with some noise artificially added. The outcomes are then compared to evaluate the confidence you can have in the resulting longer term predictions.

We should probably do the same for the predictions of distributed systems, but that's something that we never really dug into so far.

Scalability in Applicative Workload

Pierre also noted that the scalability results I presented are posed in term of amount of participating nodes. I agree that this is a bit artificial: such systems certainly exhibit enough symmetries to reduce the need of simulations with several millions of nodes. I must confess that I'm not sure that we learn really more from a Chord simulation with 10 million processes than from a simulation with only 100,000 processes, actually.

Yet, we did so because we wanted to show the raw performance of the simulator. The performance of the studied systems become more significant in other kind of studies and may even mask the performance of the kernel itself in some situations. The intended message is something like "no matter what study you plan to conduct, you can trust the performance of the simulation kernel and think about your own code instead".

Another answer is that some of our users reported that SimGrid is also scalable in the size of the applicative workload. Some colleagues at CERN use it to simulate the data placement strategy used for LHC data. They happily simulate systems with millions of files and billions of requests.

Nobody attempted to study the scalability of Content-Centric Networks with SimGrid so far, but this may change in the near future as I have a colleague here in Nancy on this thematic. He asked me a bunch of questions about SimGrid already; we will see how this evolves.

Practical Efficiency of Model Checking

In my presentation, I stressed a bit on the difficulties raised by the model checking approach. My point was to say that we still have a lot of work in this area, but I was probably too bold in my criticisms. I then had to justify this choice of methodology during the session of questions.

It was rather easy for me, as I am deeply convinced of the potential of this approach. This can be seen as 'experimental proofs' of the system properties, which is very in line with the rest of my work. In addition to my personal conviction, I pointed on the wild bugs that we found with this methodology (ie bugs that were not added artificially but that were there despite our previous efforts to fix them manually), showing its practical efficiency in our case.

Energy Considerations

Thierry noted that the main performance metric I used in my figures were time. He was wondering whether SimGrid could be used to predict the dissipated energy by a given setting. There is some work ongoing on this thematic, for example by Anne-Cécile Orgerie and George Da Costa. We are working closely with them, and I hope that some of these works will soon get integrated into SimGrid.

Even if I don't plan to work on this point myself, this is very important to me. As I often say, Science is definitely a team game. Individuals are not sufficient anymore, no matter their motivation or personal qualities.

Methodology Convergence

I naturally got a whole lot of questions on the general scientific workflow and on the compared advantages of each methodologies. This was the occasion to present our dream with Lucas (see slide 47 above), where experimental facilities, simulation and emulation would be used together. That's a long term goal, much broader than what I could achieve myself, but it's still a very appealing idea.

This led to interesting discussions on how to compare several existing scientific instruments, during the question session but also after, during the snack afterward. Computer science is not used to be an experimental science, and we clearly have a long way to go until all these considerations are natural to us.

Photos

Some friends took photos during this event, and I reproduce some of them here.

PS : oui, à la fin, j'ai passé l'épreuve avec succès (yeah, at the end I got received).