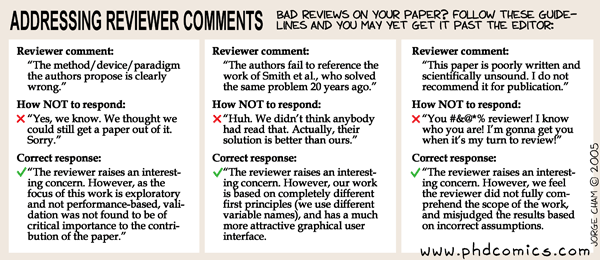

A few years ago, we came up with the Bastard Reviewer From Hell. It's inspired by the Bastard Operator From Hell, who does everything he can NOT to help, such as advising users to type "rm -rf ~" when they complain about the mass of spam in their mailbox. His excuses are hilarious, such as "Parallel processors running perpendicular today" or "Firewall needs cooling". For fun, we decided to invent similarly funky excuses for rejecting an article as a reviewer. Over the years, we extended the list, and I'd say that we now have some very funny entries, such as "You shoudl let a native english speaker reads the paper to checking the ortographe and grammar of the paper" or "The practical effectiveness of the algorithm may be somewhat overstated since the experimental results prove its inability to fulfill its goals". We are not the only ones making fun of the reviewing process: PhD comics is full of strips about it.

But for a while now, I've tended to think that it has gone a bit too far. I feel depressed when I think of the time I put into the reviews I write and the poor quality of the reviews I actually get as an author. It's tempting to go a step further and actually write ultra-short and violent reviews of the bad papers I get, just to improve the process on my side. I know people who are even proud of being that efficient, while writing reviews that actually deserve to be added to the BRFH list.

I personally never went that way, and I keep writing reviews that are as long and informative as I can. Still, I was getting bad feelings about it, as if I were spending too much time on each review, as if I were just too stupid to optimize the process for my own benefit. As a result, I accept to review others' papers less and less often, and I rarely do it on time. That's not quite a good solution either, particularly since I'm not choosing the papers I review based on their intrinsic quality, their relevance to my own research or the conference they target, but based on my friendship with the PC member asking me to do so. Plainly wrong, I guess. Also, I pretty much refuse to write reviews like "ok the paper quality is basically good even if a LOT could be improved", and I tend to reject a lot of the papers that I'm asked to review. Being a BRFH is definitely not something I appreciate, but what can you do when the paper's bad?

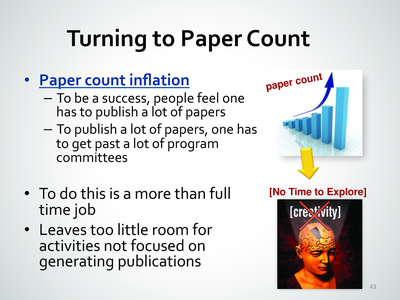

I recently came across a brilliant presentation on this topic (use acroread to read it, free readers seem to be fooled at some point. Yes, pity). The author argues that the community is dying because of three problems: low acceptance rates, emphasis on paper count and bad reviewing.

The first two points force some of us to spend more time on paper writing than on actual research. Also, we're no longer looking for interesting problems to solve, but for the minimal publishable article.

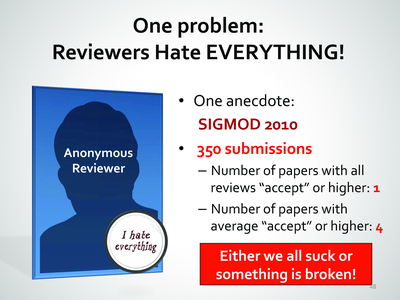

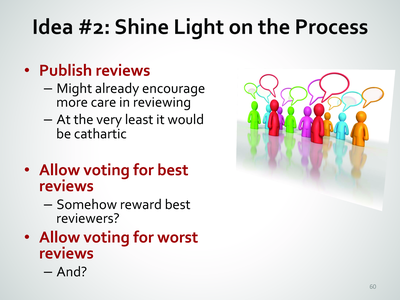

The slides about bad reviews are very, very enlightening. They really helped me understand my own difficulties with the process, and they are even funny!

Some solutions are even proposed. One of them is to remove the reviews altogether and publish everything. After all, why are we still pretending that we have a space issue forcing us to select what gets published? Disk space is quite cheap nowadays. That would solve all three problems, since nobody would take pride in writing a lot of papers if it were that easy to get them accepted, and people would focus on paper quality again.

Another solution is to stop doing anonymous reviews. That is to say that the authors would know who criticized their papers, and the reviewers would know that the authors know. Actually, that's sometimes already the case, for example when you get a review saying that you didn't cite the work of a given dude enough. Chances are high that you got reviewed by this very dude.

But I do think that a bit of official transparency would be beneficial to the scientific community (and would avoid false assumptions about your reviewer's identity when you get revenge). In some sense, that would allow the community to properly review the reviewers, which should be effective in "educating" the bad ones. We could call it Open Review, that'd be cool.

That's decided: I'll fight whenever I can to push this idea of Open Review at the conferences where I'm asked to serve as a PC member. For the time being, here is the review I did for the HeteroPar workshop. I wrote it with all these ideas in mind, and even though it was for a workshop, I refused to do a cheap review. I still feel strange about putting it on a web page, but I'm strongly convinced that Open Reviewing is a good thing. It'd be less odd if that had been announced from the beginning, but anyway.

The next step will be to extend my Publications page to add the reviews I got for each paper. At least the reviews I still have, since I tend to delete emails from time to time. My final goal would be to help others decide where to submit their papers to get the best possible reviews, regardless of the so-called impact of the journals. That could be a first step toward a sane scientific community.

And while I'm at it, I should add the experimental data and all the source code alongside my publications. That would be an effective step toward open science, which we have officially been targeting with SimGrid for years (shame, shame).

Updates

To find out more about similar ideas, you should check http://slowscience.fr (in French). I don't agree with all the ideas presented on this web page (in particular, I still don't get why people who manage to use the new technologies should be handicapped in direct social interaction), but they have some good and interesting ideas. They have a text in English that should summarize their ideas (but I haven't read it yet).

Also in French, you should absolutely check this ppt presentation entitled "Le paysage français de la recherche en 2011". They use Bruegel drawings to represent the current landscape of French research, as in the next drawing.