Directors Lens

Principle

Directors Lens is a new tool designed for animators and film directors to interactively explore a large collection of shots over their 3D animation, and rapidly create and compare multiple edits of the same animation. Designed with virtual production and rapid prototyping of synthetic movies in mind, the tool assists filmmakers in exploring the cinematographic possibilities of their 3D scene.

More details are available here: Directors Lens.

Features

- Automatic generation of stereotypical shots including: internal, external, apex, subjective, with ranges from Extreme Long Shots to Extreme Close-ups, and with medium, high and low angles

- Possibility of navigating through the set of shots depending on the targets to frame, the size of the shot, the type of shot, the visibility of targets (and more)

- Ability to store a frame composition, and re-apply it on different targets

- Ability to record free camera motions, and reapply them on different targets

- Ability to connect with a Virtual Camera System

Directors Lens Motion Builder plugin

Directors Lens is now implemented as a plugin in Motion Builder 2014. More details are available here: Directors Lens.

Here is a video quickly edited from our FMX session!

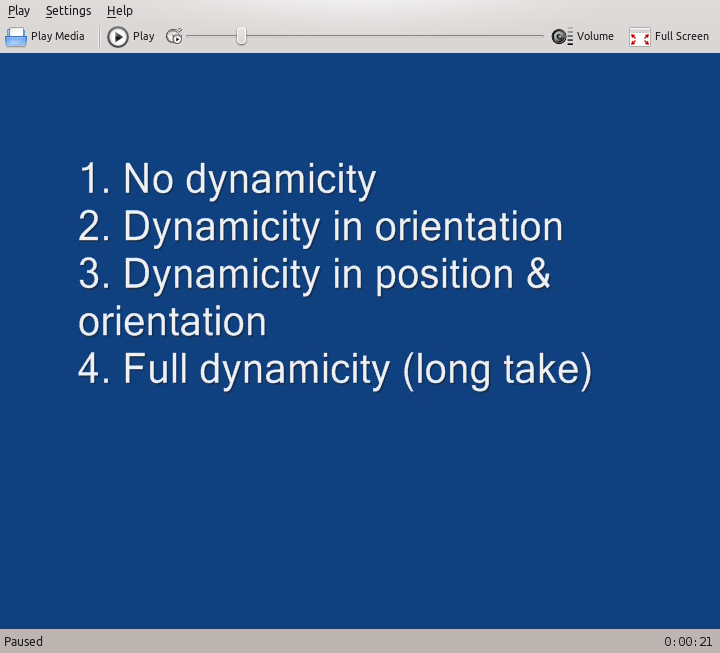

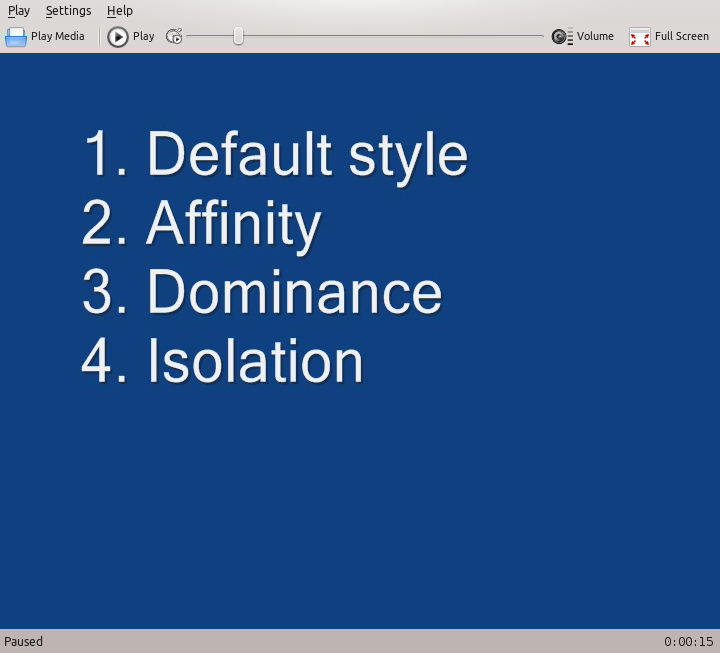

Our process relies on a viewpoint space partitioning technique in 2D that identifies characteristic viewpoints of relevant actions for which we compute the partial and full visibility. These partitions, to which we refer as Director Volumes, provide a full characterization over the space of viewpoints. We build upon this spatial characterization to select the most appropriate director volumes, reason over the volumes to perform appropriate camera cuts and rely on traditional path-planning techniques to perform transitions. Our system represents a novel and expressive approach to cinematic camera control which stands in contrast to existing techniques that are mostly procedural, only concentrate on isolated aspects (visibility, transitions, editing, framing) or do not account for variations in directorial style.

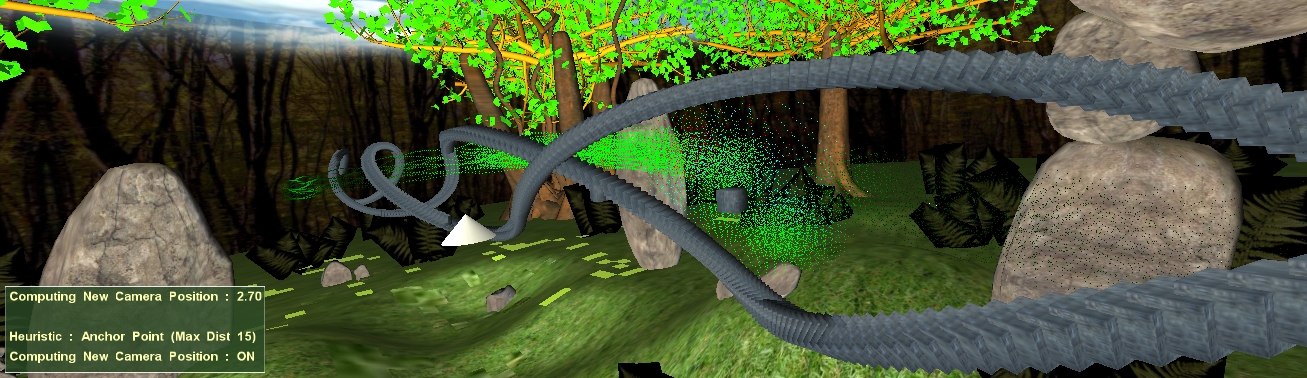

Occlusion-free Camera Control

Abstract

Computing and maintaining the visibility of target objects is a fundamental problem in the design of automatic camera control schemes for 3D graphics applications. Most real-time camera control algorithms only incorporate mechanisms for the evaluation of the visibility of target objects from a single viewpoint, and typically idealise the geometric complexity of target objects in doing so. We present a novel approach to the real-time evaluation of the visibility of multiple target objects which simultaneously computes their visibility for a large sample of points. The visibility computation step involves performing a low resolution projection from points on pairs of target objects onto a plane parallel to the pair and behind the camera. By combining the depth buffers for these projections the joint visibility of the pair can be rapidly computed for a sample of locations around the current camera position. This pair-wise computation is extended for three or more target objects and visibility results aggregated in a temporal window to mitigate over-reactive camera behaviour. To address the target object geometry idealisation problem we use a stochastic approximation of the physical extent of target objects by selecting projection points randomly from the visible surface of the target object. We demonstrate the efficiency of the approach for a number of problematic and complex scene configurations in real-time for both two and three target objects.
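The idea of scoring many candidate camera positions at once and smoothing the scores over time can be illustrated with a small sketch. This is not the paper's method: it swaps the depth-buffer projection for plain CPU ray casting against spherical occluders, takes the joint visibility of several targets to be the minimum of their individual visibilities, and samples target points from a bounding sphere rather than from the visible surface. All names (`segment_hits_sphere`, `TemporalVisibility`, and so on) are hypothetical.

```python
import math
import random
from collections import deque


def sample_sphere_surface(center, radius, n, rng=None):
    """Draw n random points on a sphere standing in for a target's surface (assumption)."""
    rng = rng or random.Random()
    points = []
    while len(points) < n:
        v = [rng.gauss(0.0, 1.0) for _ in range(3)]
        norm = math.sqrt(sum(x * x for x in v))
        if norm > 1e-9:
            points.append(tuple(center[i] + radius * v[i] / norm for i in range(3)))
    return points


def segment_hits_sphere(p, q, center, radius):
    """Return True if the segment from p to q touches the sphere."""
    d = [q[i] - p[i] for i in range(3)]
    f = [p[i] - center[i] for i in range(3)]
    a = sum(x * x for x in d)
    if a == 0.0:
        return sum(x * x for x in f) <= radius * radius
    # Parameter of the point on the segment closest to the sphere centre.
    t = -sum(f[i] * d[i] for i in range(3)) / a
    t = max(0.0, min(1.0, t))
    closest = [p[i] + t * d[i] for i in range(3)]
    dist2 = sum((closest[i] - center[i]) ** 2 for i in range(3))
    return dist2 <= radius * radius


def visibility(camera, target_points, occluders):
    """Fraction of a target's sample points with a clear line to the camera."""
    clear = sum(
        1
        for pt in target_points
        if not any(segment_hits_sphere(camera, pt, c, r) for c, r in occluders)
    )
    return clear / len(target_points)


def joint_visibility(camera, targets, occluders):
    """Joint visibility of several targets, taken here as the worst individual score."""
    return min(visibility(camera, pts, occluders) for pts in targets)


class TemporalVisibility:
    """Keeps the last `window` frames of scores for a fixed set of candidate cameras."""

    def __init__(self, candidates, window=5):
        self.candidates = candidates
        self.history = deque(maxlen=window)

    def update(self, targets, occluders):
        self.history.append(
            [joint_visibility(c, targets, occluders) for c in self.candidates]
        )

    def averaged(self):
        n = len(self.history)
        return [
            sum(frame[i] for frame in self.history) / n
            for i in range(len(self.candidates))
        ]

    def best(self):
        scores = self.averaged()
        return max(range(len(scores)), key=scores.__getitem__)
```

Averaging over the window means a candidate that is occluded for a single frame loses only part of its score, which is one simple way to avoid the over-reactive behaviour the abstract mentions.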

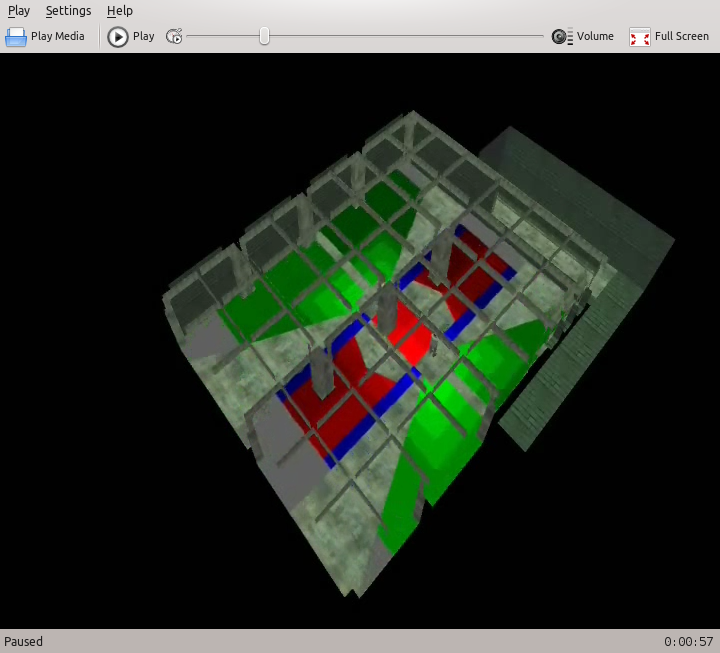

Illustrations and videos

A Constraint-Based Approach to Camera Path Planning

Abstract

The hypertube paradigm is a new constraint-based approach to camera control. In this framework, the user acts as a film director. He provides (1) a sequence of elementary and generic camera movements to model the shot, and (2) properties such as locations or orientations of objects on the screen. The goal is to instantiate the given camera movements so that they satisfy the desired properties. The geometrical description of the scene (dynamics of characters and objects) is generally given by a modeler and is an input to our system.

We introduce a set of elementary camera movements, the cinematographic primitives called hypertubes, that allow the modeling of arbitrary movements by simple composition.

Illustrations

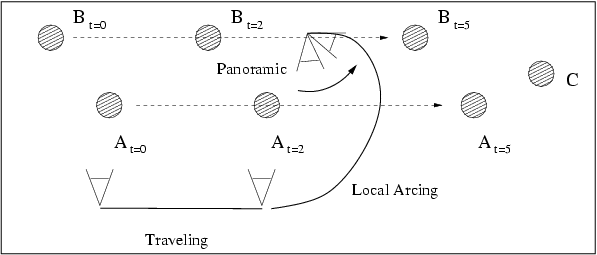

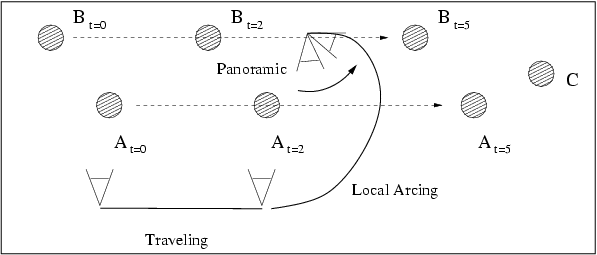

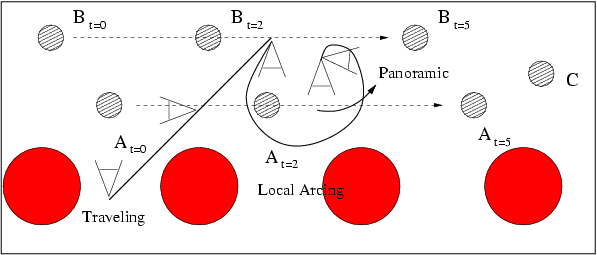

The first example relies on a 3D scene composed of two mobile characters (A and B) and a static object C. The user's description is the following (leftFrame, rightFrame and middleFrame are user-defined frames):

| Statement | Description |
| --- | --- |
| `Traveling("trav", 2)` | create a 2 sec. traveling |
| `LArc('arc', 'A', 1)` | create a 1 sec. arcing around object A |
| `Pan('pan', 2)` | create a 2 sec. pan |
| `Connect('trav', 'arc')` | connect movements together |
| `Connect('arc', 'pan')` | connect movements together |
| `Frame('A', rightFrame , [0..5])` | "right-frame" object A during the whole sequence (5 s.) |
| `Orient('A', RIGHT-SIDE, 0)` | see A's right side at the beginning |
| `Frame('B', leftFrame , 5);` | "left-frame" object B at the end of the sequence |
| `Frame('C', middleFrame , 5);` | "middle-frame" object C at the end of the sequence |
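The description above implies a timeline: the three connected movements last 2 s, 1 s and 2 s, which is why the interval `[0..5]` covers the whole sequence. A minimal Python sketch of that bookkeeping follows. It only chains durations; it does not solve the framing or orientation constraints, and the `schedule` function and its arguments are hypothetical names, not part of the hypertube system.

```python
def schedule(durations, connections):
    """Chain connected movements and return {name: (start, end)} in seconds.

    durations:   {movement name: duration in seconds}
    connections: list of (first, second) pairs, as in Connect(first, second)
    """
    successor = dict(connections)
    followers = set(successor.values())
    # The sequence starts with the only movement that follows no other one.
    heads = [name for name in durations if name not in followers]
    if len(heads) != 1:
        raise ValueError("expected exactly one movement with no predecessor")

    timeline = {}
    t = 0.0
    current = heads[0]
    while current is not None:
        if current in timeline:
            raise ValueError("connections form a cycle")
        end = t + durations[current]
        timeline[current] = (t, end)
        t = end
        current = successor.get(current)

    if len(timeline) != len(durations):
        raise ValueError("some movements are not connected to the sequence")
    return timeline


# The first example: a traveling, an arcing around A, then a pan.
timeline = schedule(
    {"trav": 2.0, "arc": 1.0, "pan": 2.0},
    [("trav", "arc"), ("arc", "pan")],
)
print(timeline)
```

With these inputs the traveling spans 0 to 2 s, the arcing 2 to 3 s and the pan 3 to 5 s, so a property stated at time 5 applies to the last instant of the pan.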

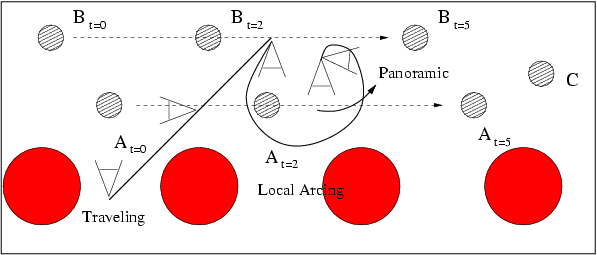

The second example adds the following properties to the first example:

| Statement | Description |
| --- | --- |
| `Collision([0..5])` | avoid collisions for the whole sequence |
| `Occlusion('A', [0..5])` | avoid occlusion of A for the whole sequence |

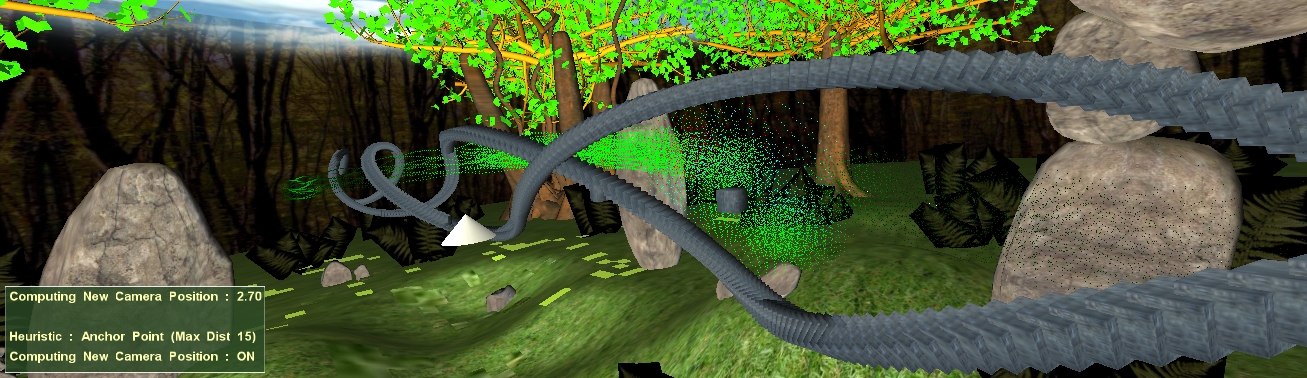

Results of Example #1 (at the top) and #2 (at the bottom) based on a Traveling, a Local Arcing and a Panoramic (red circles represent the obstacles). Due to the obstacles, the second path is entirely different yet the properties are preserved. Execution times are respectively 3170 ms and 6090 ms.
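To make the two added properties concrete, here is a hedged Python sketch that checks a given 2D camera path against circular obstacles by sampling it over a time interval. It is a verification by sampling, not the constraint-solving approach of the paper, and sampling can miss violations that occur between samples. The function names, the 2D setting and the sample count are assumptions made for illustration.

```python
import math


def point_segment_distance(c, p, q):
    """Distance from point c to the 2D segment p-q."""
    dx, dy = q[0] - p[0], q[1] - p[1]
    length2 = dx * dx + dy * dy
    if length2 == 0.0:
        return math.hypot(c[0] - p[0], c[1] - p[1])
    t = ((c[0] - p[0]) * dx + (c[1] - p[1]) * dy) / length2
    t = max(0.0, min(1.0, t))
    return math.hypot(c[0] - (p[0] + t * dx), c[1] - (p[1] + t * dy))


def check_properties(path, target, obstacles, start, end, samples=50):
    """Return (collision_free, occlusion_free) for t in [start, end].

    path(t) and target(t) give 2D positions of the camera and the target;
    obstacles is a list of (center, radius) circles.
    """
    collision_free = True
    occlusion_free = True
    for i in range(samples + 1):
        t = start + (end - start) * i / samples
        cam = path(t)
        tgt = target(t)
        for center, radius in obstacles:
            # Collision: the camera itself lies inside an obstacle.
            if math.hypot(cam[0] - center[0], cam[1] - center[1]) <= radius:
                collision_free = False
            # Occlusion: the line of sight to the target crosses an obstacle.
            if point_segment_distance(center, cam, tgt) <= radius:
                occlusion_free = False
    return collision_free, occlusion_free


# A straight 5 s path past a static target, with one obstacle in the line of sight.
result = check_properties(
    path=lambda t: (2.0 * t, 0.0),
    target=lambda t: (5.0, 5.0),
    obstacles=[((5.0, 2.0), 0.5)],
    start=0.0,
    end=5.0,
)
print(result)
```

In this usage example the camera never enters the obstacle, but the obstacle sits between the camera and the target midway through the path, so the collision check passes and the occlusion check fails.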

The following thumbnails present the results of Example #2.