Franck Multon

Professor University Rennes 2 - Inria

2015

New Lower-Limb Gait Asymmetry Indices Based on a Depth Camera. E Auvinet, F Multon, J Meunier. Sensors 15 (3), 4605-4623

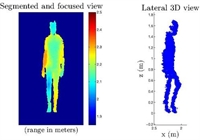

Background: Various asymmetry indices have been proposed to compare the spatiotemporal, kinematic and kinetic parameters of lower limbs during the gait cycle. However, these indices rely on gait measurement systems that are costly and generally require manual examination, calibration procedures and the precise placement of sensors/markers on the body of the patient. Methods: To overcome these issues, this paper proposes a new asymmetry index, which uses an inexpensive, easy-to-use and markerless depth camera (Microsoft Kinect™) output. This asymmetry index directly uses depth images provided by the Kinect™ without requiring joint localization. It is based on the longitudinal spatial difference between lower-limb movements during the gait cycle. To evaluate the relevance of this index, fifteen healthy subjects were tested on a treadmill walking normally and then via an artificially-induced gait asymmetry with a thick sole placed under one shoe. The gait movement was simultaneously recorded using a Kinect™ placed in front of the subject and a motion capture system. Results: The proposed longitudinal index distinguished asymmetrical gait (p < 0.001), while other symmetry indices based on spatiotemporal gait parameters failed using such Kinect™ skeleton measurements. Moreover, the correlation coefficient between this index measured by Kinect™ and the ground truth of this index measured by motion capture is 0.968. Conclusion: This gait asymmetry index measured with a Kinect™ is low cost, easy to use and is a promising development for clinical gait analysis.
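
For context, the spatiotemporal indices that the abstract compares against are typically computed from one left-side and one right-side gait parameter. The sketch below shows one commonly used form, the difference between the two sides normalized by their mean. It is not the depth-image index proposed in the paper, whose exact definition the abstract does not give. The function name and the step-length values are hypothetical.

```python
def symmetry_index(left_value, right_value):
    """Percent difference between left and right values, normalized by their mean.

    0 means perfect symmetry; the sign shows which side is larger.
    This is a classical spatiotemporal index, NOT the paper's depth-based index.
    """
    mean = 0.5 * (left_value + right_value)
    if mean == 0:
        raise ValueError("left and right values must not sum to zero")
    return 100.0 * (left_value - right_value) / mean


# Hypothetical step lengths in metres, for illustration only.
symmetric = symmetry_index(0.60, 0.60)
asymmetric = symmetry_index(0.66, 0.54)
print(f"symmetric gait: {symmetric:.1f}%, asymmetric gait: {asymmetric:.1f}%")
```

An index of this kind depends on reliable step detection, which is where the abstract reports that skeleton-based Kinect measurements fell short.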

Pose Estimation with a Kinect for Ergonomic Studies: Evaluation of the Accuracy Using a Virtual Mannequin. P Plantard, E Auvinet, AS Le Pierres, F Multon. Sensors 15, 1785-1803

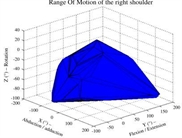

Analyzing human poses with a Kinect is a promising method to evaluate potential risks of musculoskeletal disorders at workstations. In ecological situations, complex 3D poses and constraints imposed by the environment make it difficult to obtain reliable kinematic information. Thus, being able to predict the potential accuracy of the measurement for such complex 3D poses and sensor placements is challenging in classical experimental setups. To tackle this problem, we propose a new evaluation method based on a virtual mannequin. In this study, we apply this method to the evaluation of joint positions (shoulder, elbow, and wrist), joint angles (shoulder and elbow), and the corresponding RULA (a popular ergonomics assessment grid) upper-limb score for a large set of poses and sensor placements. Thanks to this evaluation method, more than 500,000 configurations have been automatically tested, which would be almost impossible to evaluate with classical protocols. The results show that the kinematic information obtained by the Kinect software is generally accurate enough to fill in ergonomic assessment grids. However, inaccuracy strongly increases for some specific poses and sensor positions. Using this evaluation method enabled us to report configurations that could lead to these high inaccuracies. As supplementary material, we provide a software tool to help designers evaluate the expected accuracy of this sensor for a set of upper-limb configurations. Results obtained with the virtual mannequin are in accordance with those obtained from a real subject for a limited set of poses and sensor placements.

2014

Third Person View And Guidance For More Natural Motor Behaviour In Immersive Basketball Playing. A. Covaci, AH. Olivier, F. Multon. Proceedings of the 20th ACM Symposium on Virtual Reality Software and Technology VRST'2014, pages 55-64 - Best Paper Award VRST'2014

The use of Virtual Reality (VR) in sports training is now widely studied with the perspective to transfer motor skills learned in virtual environments (VEs) to real practice. However, precision motor tasks that require high accuracy have rarely been studied in the context of VEs, especially on Large Screen Image Display (LSID) platforms. An example of such a motor task is the basketball free throw, where the player has to throw a ball into a 46 cm wide basket placed 4.2 m away from her. In order to determine the best VE training conditions for this type of skill, we proposed and compared three training paradigms. These training conditions were used to compare the combinations of different user perspectives, first-person (1PP) and third-person (3PP), and the effectiveness of visual guidance. We analysed the performance of eleven amateur subjects who performed series of free throws in a real and immersive 1:1 scale environment under the proposed conditions. The results show that ball speed at the moment of release in 1PP was significantly lower than in the real world, supporting the hypothesis that distance is underestimated in large-screen VEs. However, ball speed in the 3PP condition was closer to the real condition, especially when combined with guidance feedback. Moreover, when guidance information was provided, the subjects released the ball at a higher position, closer to optimal (5-7% higher compared to no-guidance conditions). This type of information contributes to a better understanding of the impact of visual feedback on the motor performance of users who wish to train motor skills using immersive environments. Moreover, this information can be used by exergames designers who wish to develop coaching systems to transfer motor skills learned in VEs to real practice.

Detection of gait cycles in treadmill walking using a Kinect. E. Auvinet, F. Multon, J. Meunier, M. Raison, CE. Aubin. Gait and Posture, 2014, p. 15.

Treadmill walking is commonly used to analyze several gait cycles in a limited space. Depth cameras, such as the low-cost and easy-to-use Kinect sensor, look promising for gait analysis on a treadmill in routine outpatient clinics. However, gait analysis is based on accurately detecting gait events (such as heel-strike) by tracking the feet, which may be incorrectly recognized with Kinect. Indeed, depth images could lead to confusion between the ground and the feet around the contact phase. To tackle this problem, we assume that heel-strike events could be indirectly estimated by searching for extreme values of the distance between knee joints along the longitudinal walking axis. To evaluate this assumption, the motion of 11 healthy subjects walking on a treadmill was recorded using both an optoelectronic system and Kinect. The measures were compared to reference heel-strike events obtained with vertical foot velocity. When using the optoelectronic system to assess knee joints, heel-strike estimation errors were very small (29 ± 18 ms), leading to small cycle duration errors (0 ± 15 ms). To locate the knees in the depth map (Kinect), we used anthropometrical data to select the body point located at a constant height where the knee should be, based on a reference posture. This Kinect approach gave heel-strike errors of 17 ± 24 ms (mean cycle duration error: 0 ± 12 ms). Using this same anthropometric methodology with optoelectronic data, the heel-strike error was 12 ± 12 ms (mean cycle duration error: 0 ± 11 ms). Compared to previous studies using Kinect, heel-strike and gait cycles were more accurately estimated, which could improve clinical gait analysis with such a sensor.
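
The idea of locating heel-strikes at extreme values of the longitudinal knee-to-knee distance can be sketched as follows. This is a minimal illustration on a synthetic, noise-free signal, not the authors' implementation. The function names, the 30 Hz frame rate, the sinusoidal knee trajectories, and the convention that maxima correspond to the left leg being in front are all assumptions made for the example.

```python
import math


def local_extrema(signal):
    """Return (maxima, minima): frame indices of local extrema of a sequence."""
    maxima, minima = [], []
    for i in range(1, len(signal) - 1):
        if signal[i] > signal[i - 1] and signal[i] >= signal[i + 1]:
            maxima.append(i)
        elif signal[i] < signal[i - 1] and signal[i] <= signal[i + 1]:
            minima.append(i)
    return maxima, minima


def estimate_heel_strikes(left_knee_x, right_knee_x, frame_rate_hz):
    """Estimate heel-strike times (s) from longitudinal knee positions.

    Assumed convention: a maximum of (left - right) means the left knee is
    furthest forward, taken here as a left heel-strike; a minimum is taken
    as a right heel-strike.
    """
    distance = [l - r for l, r in zip(left_knee_x, right_knee_x)]
    maxima, minima = local_extrema(distance)
    left_times = [i / frame_rate_hz for i in maxima]
    right_times = [i / frame_rate_hz for i in minima]
    return left_times, right_times


# Synthetic data: three 1.2 s gait cycles sampled at an assumed 30 Hz.
FRAME_RATE_HZ = 30.0
FRAMES_PER_CYCLE = 36
left_knee_x = [0.15 * math.sin(2 * math.pi * i / FRAMES_PER_CYCLE)
               for i in range(3 * FRAMES_PER_CYCLE)]
right_knee_x = [-x for x in left_knee_x]  # legs move in anti-phase

left_hs, right_hs = estimate_heel_strikes(left_knee_x, right_knee_x, FRAME_RATE_HZ)
cycle_durations = [b - a for a, b in zip(left_hs, left_hs[1:])]
print("left heel-strikes (s):", left_hs)
print("cycle durations (s):", cycle_durations)
```

Real depth-camera signals are noisy, so a practical version would smooth the distance signal before searching for extrema. That step is omitted here to keep the sketch short.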

Using task efficient contact configurations to animate creatures in arbitrary environments. S. Tonneau, J. Pettré, F. Multon. Computers and Graphics, 2014, 45, p. 10.

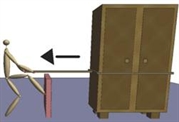

A common issue in three-dimensional animation is the creation of contacts between a virtual creature and the environment. Contacts allow force exertion, which produces motion. This paper addresses the problem of computing contact configurations that allow a creature to perform motion tasks such as getting up from a sofa, pushing an object or climbing. We propose a two-step method to generate contact configurations suitable for such tasks. The first step is an offline sampling of the reachable workspace of a virtual creature. The second step is a run-time request confronting the samples with the current environment. The best contact configurations are then selected according to a heuristic for task efficiency. The heuristic is inspired by the force transmission ratio. Given a contact configuration, it measures the potential force that can be exerted in a given direction. Our method is automatic and does not require examples or motion capture data. It is suitable for real-time applications and applies to arbitrary creatures in arbitrary environments. Various scenarios (such as climbing, crawling, getting up, pushing or pulling objects) are used to demonstrate that our method enhances motion autonomy and interactivity in constrained environments.
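
To make the selection heuristic more concrete, the sketch below computes the classical force transmission ratio for a planar two-link limb and picks, among a few sampled joint configurations, the one that can exert the most force in a given direction. The paper's heuristic is only described as inspired by this ratio, so this is an illustration under assumptions, not the authors' method. The two-link model, the unit link lengths, the candidate configurations and all function names are hypothetical.

```python
import math


def planar_two_link_jacobian(q1, q2, l1=1.0, l2=1.0):
    """Jacobian of the end-effector position of a planar two-link limb."""
    s1, c1 = math.sin(q1), math.cos(q1)
    s12, c12 = math.sin(q1 + q2), math.cos(q1 + q2)
    return [[-l1 * s1 - l2 * s12, -l2 * s12],
            [l1 * c1 + l2 * c12, l2 * c12]]


def force_transmission_ratio(jacobian, direction):
    """Classical ratio (u^T J J^T u)^(-1/2) for the unit vector u along `direction`.

    It is the largest force magnitude along u that unit-norm joint torques
    can balance, since the torques for a force f are J^T f.
    """
    norm = math.hypot(*direction)
    u = [d / norm for d in direction]
    # Joint torques needed to exert a unit force along u: tau = J^T u.
    torques = [sum(jacobian[r][c] * u[r] for r in range(len(u)))
               for c in range(len(jacobian[0]))]
    torque_norm = math.sqrt(sum(t * t for t in torques))
    return math.inf if torque_norm == 0.0 else 1.0 / torque_norm


def best_configuration(candidates, direction):
    """Pick the sampled configuration with the highest ratio along `direction`."""
    return max(candidates,
               key=lambda q: force_transmission_ratio(
                   planar_two_link_jacobian(*q), direction))


# Hypothetical sampled configurations (joint angles in radians).
candidates = [(0.0, math.pi / 2), (math.pi / 2, -math.pi / 2)]
push_direction = (1.0, 0.0)
best = best_configuration(candidates, push_direction)
print("best configuration for pushing along x:", best)
```

In the method described above, the candidates would come from the offline sampling of the reachable workspace, filtered at run time against the environment. Here they are hard-coded for brevity.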

Task Efficient Contact Configurations for Arbitrary Virtual Creatures. S. Tonneau, J. Pettré, F. Multon. Proceedings of Graphics Interface'2014, p. 9-16, Montreal (CA)

A common issue in three-dimensional animation is the creation of contacts between a virtual creature and the environment. Contacts allow force exertion, which produces motion. This paper addresses the problem of computing contact configurations that allow a creature to perform motion tasks such as getting up from a sofa, pushing an object or climbing. We propose a two-step method to generate contact configurations suitable for such tasks. The first step is an offline sampling of the reachable workspace of a virtual creature. The second step is a run-time request confronting the samples with the current environment. The best contact configurations are then selected according to a heuristic for task efficiency. The heuristic is inspired by the force transmission ratio. Given a contact configuration, it measures the potential force that can be exerted in a given direction. Our method is automatic and does not require examples or motion capture data. It is suitable for real-time applications and applies to arbitrary creatures in arbitrary environments. Various scenarios (such as climbing, crawling, getting up, pushing or pulling objects) are used to demonstrate that our method enhances motion autonomy and interactivity in constrained environments.

Toward "Pseudo-Haptic Avatars": Modifying the Visual Animation of Self-Avatar Can Simulate the Perception of Weight Lifting. DAG Jauregui, F Argelaguet, AH Olivier, M Marchal, F Multon, A Lecuyer. Visualization and Computer Graphics, IEEE Transactions on 20 (4), 654-661 (IEEE VR2014)

In this paper we study how the visual animation of a self-avatar could be artificially modified in real-time in order to generate different haptic perceptions. In our experimental setup participants could watch their self-avatar in a virtual environment in mirror mode. They could map their gestures on the self-animated avatar in real-time using a Kinect. The experimental task consisted of lifting virtual dumbbells that participants could manipulate by means of a tangible stick. We introduce three kinds of modification of the visual animation of the self-avatar: 1) an amplification (or reduction) of the user motion (change in C/D ratio), 2) a change in the dynamic profile of the motion (temporal animation), or 3) a change in the posture of the avatar (angle of inclination). Thus, to simulate the lifting of a “heavy” dumbbell, the avatar animation was distorted in real-time using an amplification of the user motion, slower dynamics, and a larger angle of inclination of the avatar. We evaluated the potential of each technique using an ordering task with four different virtual weights. Our results show that the ordering task could be well achieved with every technique. The C/D ratio-based technique was found to be the most efficient. Participants nevertheless appreciated all the different visual effects overall, and the best results were observed in the combination configuration. Our results pave the way to the exploitation of such novel techniques in various VR applications such as sport training, exercise games, or industrial training scenarios in single or collaborative mode.

2013

Dealing with variability when recognizing user's performance in natural 3D gesture interfaces. A Sorel, R Kulpa, E Badier, F Multon. International Journal of Pattern Recognition and Artificial Intelligence 27 (08)

Recognition of natural gestures is a key issue in many applications including videogames and other immersive applications. Whatever the motion capture device, the key problem is to recognize a motion that could be performed by a range of different users, at an interactive frame rate. Hidden Markov Models (HMMs), which are commonly used to recognize the performance of a user, however rely on a motion representation that strongly affects the overall recognition rate of the system. In this paper, we propose to use a compact motion representation based on Morphology-Independent features and we evaluate its performance compared to classical representations. When dealing with 15 very similar upper limb motions, HMMs based on Morphology-Independent features yield a significantly higher recognition rate (84.9%) than classical Cartesian or angular data (70.4% and 55.0%, respectively). Moreover, when the unknown motions are performed by a large number of users who have never contributed to the learning process, the recognition rate of the Morphology-Independent input features only decreases slightly (down to 68.2% for an HMM trained with the motions of only one subject) compared to other features (25.3% for Cartesian features and 17.8% for angular features in the same conditions). The method is illustrated through an interactive demo in which three virtual humans have to interactively recognize and replay the performance of the user. Each virtual human is associated with an HMM recognizer based on one of the three different input features.

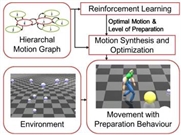

Natural Preparation Behaviour Synthesis. H. Shum, L. Hoyet, E. Ho, T. Komura, F. Multon. Computer Animation and Virtual Worlds 1546 (Sept. 2013)

Humans adjust their movements in advance to prepare for the forthcoming action, resulting in efficient and smooth transitions. However, traditional computer animation approaches such as motion graphs simply concatenate a series of actions without taking into account the following one. In this paper, we propose a new method to produce preparation behaviors using reinforcement learning. As an offline process, the system learns the optimal way to approach a target and to prepare for interaction. A scalar value called the level of preparation is introduced, which represents the degree of transition from the initial action to the interacting action. To synthesize the movements of preparation, we propose a customized motion blending scheme based on the level of preparation, which is followed by an optimization framework that adjusts the posture to keep the balance. During runtime, the trained controller drives the character to move to a target with the appropriate level of preparation, resulting in a humanlike behavior. We create scenes in which the character has to move in a complex environment and to interact with objects, such as crawling under and jumping over obstacles while walking. The method is useful not only for computer animation but also for real-time applications such as computer games, in which the characters need to accomplish a series of tasks in a given environment.

Fast Grasp Planning by Using Cord Geometry to Find Grasping Points. Y. Li, JP. Saut, A. Sahbani, P. Bidaud, J. Pettré, F. Multon. IEEE International Conference on Robotics and Automation ICRA (2013) 3265 - 3270.

In this paper, we propose a novel idea to address the problem of fast computation of enveloping grasp configurations for a multi-fingered hand with 3D polygonal models represented as polygon soups. The proposed method performs a low-level shape matching by wrapping multiple cords around an object in order to quickly isolate promising grasping spots. From these spots, the hand palm posture can be computed, followed by a standard close-until-contact procedure to find the contact points. Along with the contact information, the finger kinematics is then used to filter out unstable grasps. Through multiple simulated examples with a twelve degrees-of-freedom anthropomorphic hand, we demonstrate that our method can compute good grasps for objects with complex geometries in a short amount of time. Best of all, this is achieved without complex model preprocessing such as segmentation by parts and medial axis extraction.

Personified and multistate camera motions for first-person navigation in desktop virtual reality. L. Terziman, M. Marchal, F. Multon, B. Arnaldi, A. Lécuyer. IEEE Transactions on Visualization and Computer Graphics 14(9):652-661. 2013.

In this paper we introduce novel “Camera Motions” (CMs) to improve the sensations related to locomotion in virtual environments (VE). Traditional Camera Motions are artificial oscillating motions applied to the subjective viewpoint when walking in the VE, and they are meant to evoke and reproduce the visual flow generated during a human walk. Our novel camera motions are: (1) multistate, (2) personified, and (3) they can take into account the topography of the virtual terrain. Being multistate, our CMs can account for different states of locomotion in the VE, namely walking, but also running and sprinting. Being personified, our CMs can be adapted to the avatar’s physiology, such as its size, weight or training status. They can then take into account the avatar’s fatigue and recuperation to update the visual CMs accordingly. Last, our approach is adapted to the topography of the VE. Running up a steep positive slope would rapidly decrease the advance speed of the avatar, increase its energy loss, and eventually change the locomotion mode, influencing the visual feedback of the camera motions. Our new approach relies on a locomotion simulator partially inspired by human physiology and implemented for real-time use in Desktop VR. We have conducted a series of experiments to evaluate the perception of our new CMs by naive participants. Results notably show that participants could discriminate and perceive transitions between the different locomotion modes by relying exclusively on our CMs. They could also perceive some properties of the avatar being used and, overall, greatly appreciated the new CM techniques. Taken together, our results suggest that our new CMs could be introduced in Desktop VR applications involving first-person navigation, in order to enhance sensations of walking, running, and sprinting, with potentially different avatars and over uneven terrains, such as for training, virtual visits or video games.

2012

S. Brault, R. Kulpa, F. Multon, B. Bideau (2012) Deception in Sports Using Immersive Environments. IEEE Intelligent Systems, 27(6):5-7

The king-kong effects: improving sensation of walking in VR with visual and tactile vibrations at each step. L. Terziman, M. Marchal, F. Multon, B. Arnaldi, A. Lécuyer. IEEE Symposium on 3D User Interfaces (3DUI), 2012, p. 19-26

In this paper we present novel sensory feedbacks named “King-Kong Effects” to enhance the sensation of walking in virtual environments. King-Kong Effects are inspired by special effects in movies in which the approach of a gigantic creature is suggested by adding visual vibrations/pulses to the camera at each of its steps. In this paper, we propose to add artificial visual or tactile vibrations (King-Kong Effects or KKE) at each footstep detected (or simulated) during the virtual walk of the user. The user can be seated, and our system proposes to use vibrotactile tiles located under his/her feet for tactile rendering, in addition to the visual display. We have designed different kinds of KKE based on vertical or lateral oscillations, physical or metaphorical patterns, and one or two peaks for heel-toe contact simulation. We have conducted different experiments to evaluate the preferences of users navigating with or without the various KKE. Taken together, our results identify the best choices for future uses of visual and tactile KKE, and they suggest a preference for multisensory combinations. Our King-Kong Effects could be used in a variety of VR applications targeting the immersion of a user walking in a 3D virtual scene.

Lower limb movement asymmetry measurement with a depth camera. E. Auvinet, F. Multon, J. Meunier. Annual International Conference of the IEEE - Engineering in Medicine and Biology Society (EMBC), 2012, p. 6793-6796

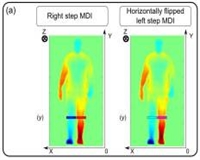

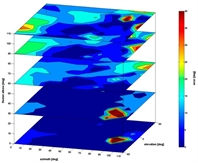

The gait movement seems simple at first glance, but in reality it is a very complex neural and biomechanical process. In particular, if a person is affected by a disease or an injury, the gait may be modified. The left-right asymmetry of this movement can be related to neurological diseases, segment length differences or joint deficiencies. This paper proposes a novel method to analyze the asymmetry of lower limb movement, which aims to be usable in daily clinical practice. This is done by recording the subject walking on a treadmill with a depth camera and then assessing left-right depth differences for the lower limbs during the gait cycle, using horizontal flipping and registration of depth images taken half a gait cycle apart. Validation on 20 subjects, for normal gait and simulated pathologies (with a 5 cm sole), showed that this system is able to distinguish the asymmetry introduced. The major interest of this method is the low cost of the material needed and its easy setup in a clinical environment.
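The flip-and-compare idea in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes the gait period in frames is already known, that the subject stays centered in the image so the registration step can be skipped, and that background pixels are marked as NaN. The names `asymmetry_index` and `synthetic_gait` are hypothetical.

```python
import numpy as np


def asymmetry_index(depth, period):
    """Mean absolute depth difference between each frame and the mirrored
    frame half a gait cycle later.

    depth:  (T, H, W) array of lower-limb depth maps, NaN for background.
    period: gait cycle length in frames (assumed known and even).
    Registration is omitted: the subject is assumed centered in the image.
    """
    half = period // 2
    current = depth[:-half]            # frames at time t
    mirrored = depth[half:, :, ::-1]   # frames at t + half cycle, flipped left-right
    return float(np.nanmean(np.abs(current - mirrored)))


def synthetic_gait(period=20, cycles=3, height=4, width=6, offset=0.0):
    """Toy data (an assumption for illustration): the left and right image
    halves oscillate in depth in antiphase; `offset` shifts the right half
    to imitate an asymmetry."""
    phase = 2 * np.pi * np.arange(period * cycles) / period
    depth = np.full((period * cycles, height, width), 2.0)
    depth[:, :, : width // 2] += 0.1 * np.sin(phase)[:, None, None]
    depth[:, :, width // 2 :] += 0.1 * np.sin(phase + np.pi)[:, None, None] + offset
    return depth


symmetric = asymmetry_index(synthetic_gait(), period=20)
asymmetric = asymmetry_index(synthetic_gait(offset=0.05), period=20)
```

On this toy data the index is close to zero for the symmetric sequence and equals the injected offset for the shifted one, because a perfectly symmetric gait reproduces its own mirror image half a cycle later.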

1995-2011

Before 2012