My primary research interests are the analysis and synthesis of motion, and the modeling and animation of characters and their environment (including natural phenomena). I am also interested in rendering, color science and computational aesthetics.

Signing Avatars

Motion Analysis and Synthesis from Captured Data

Modeling

Character Animation

Natural Phenomena

Perceptually based Algorithms

Color Science & Computational Aesthetics

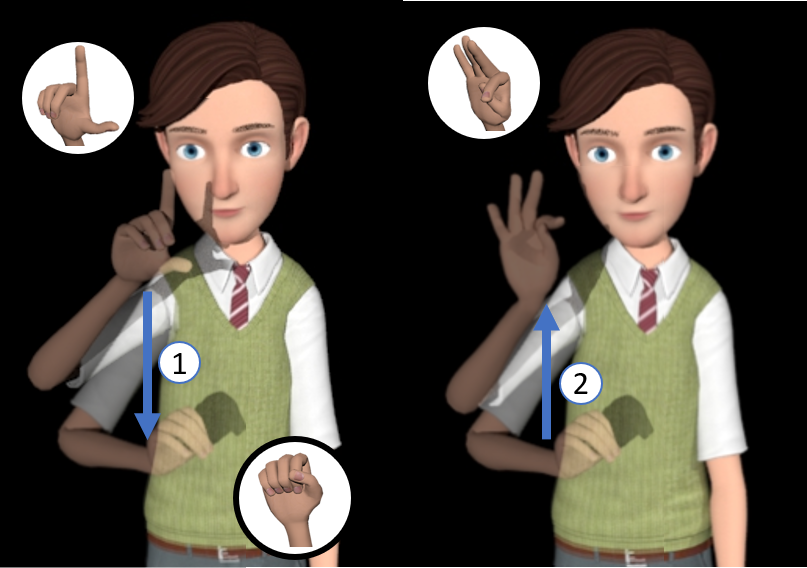

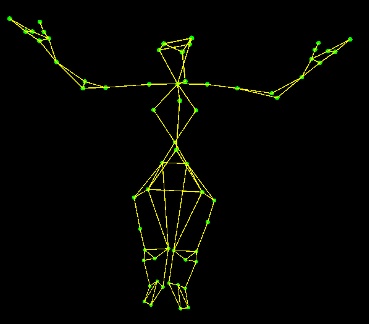

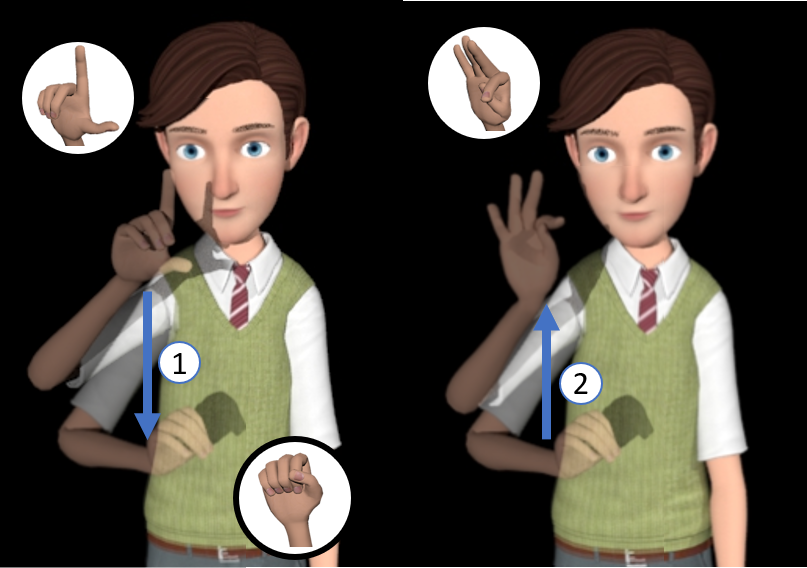

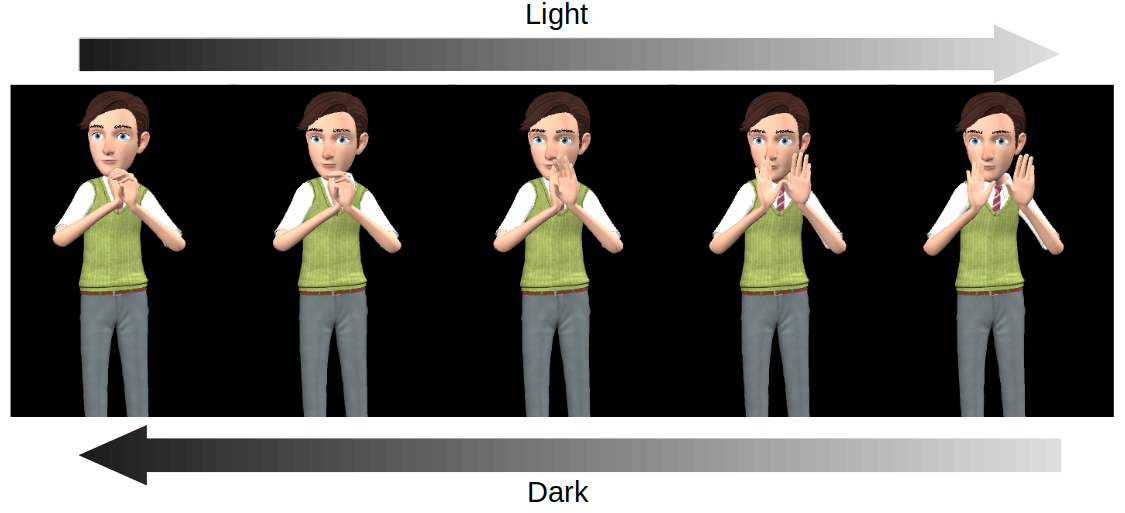

Signing Avatars


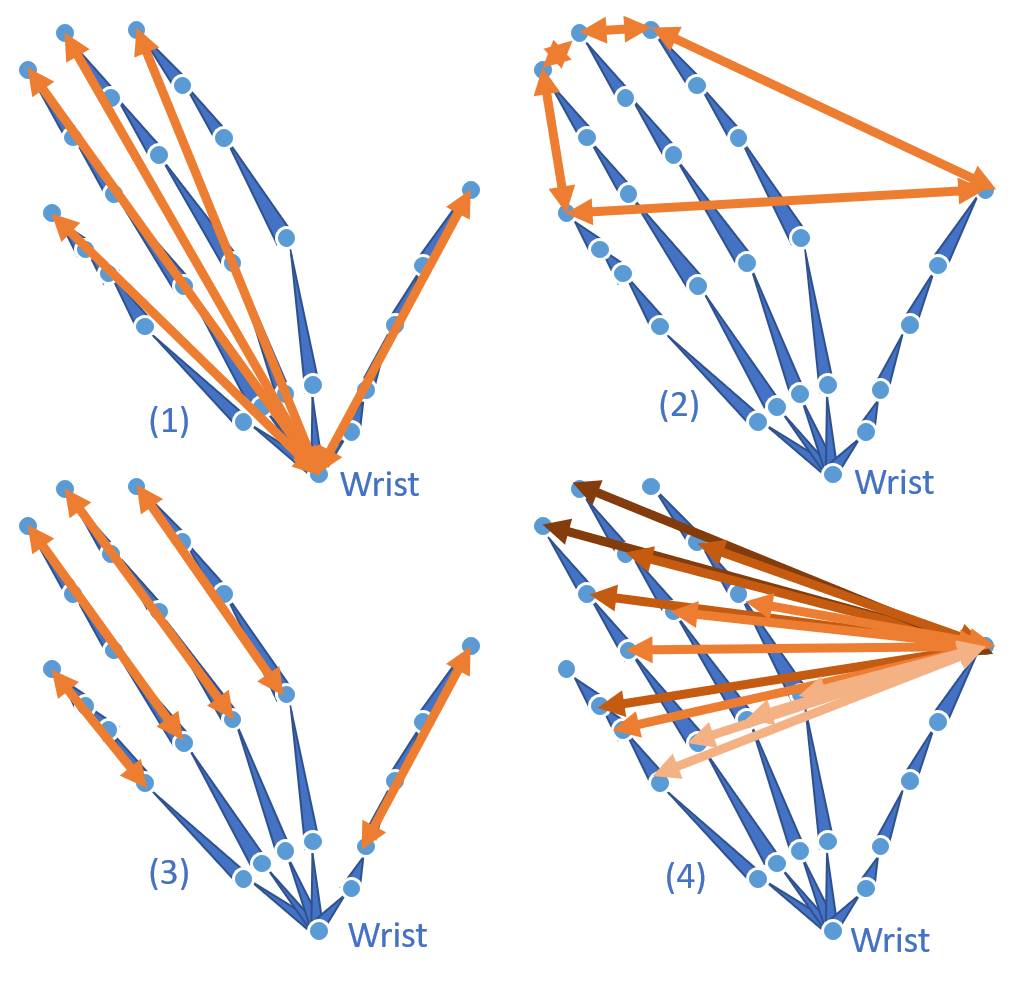

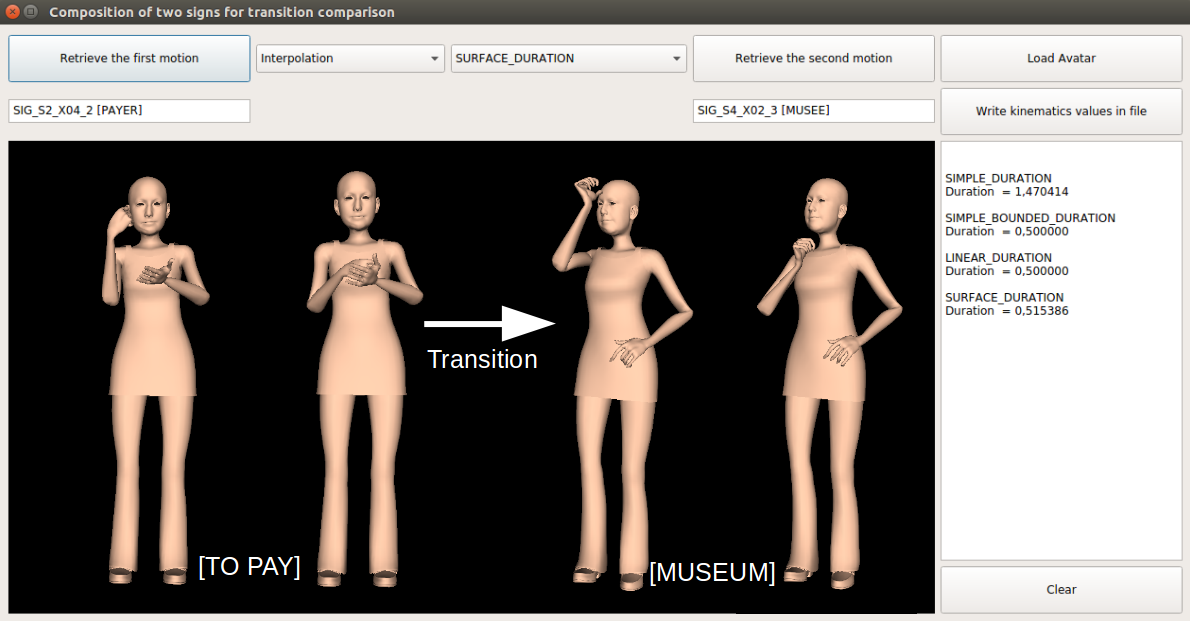

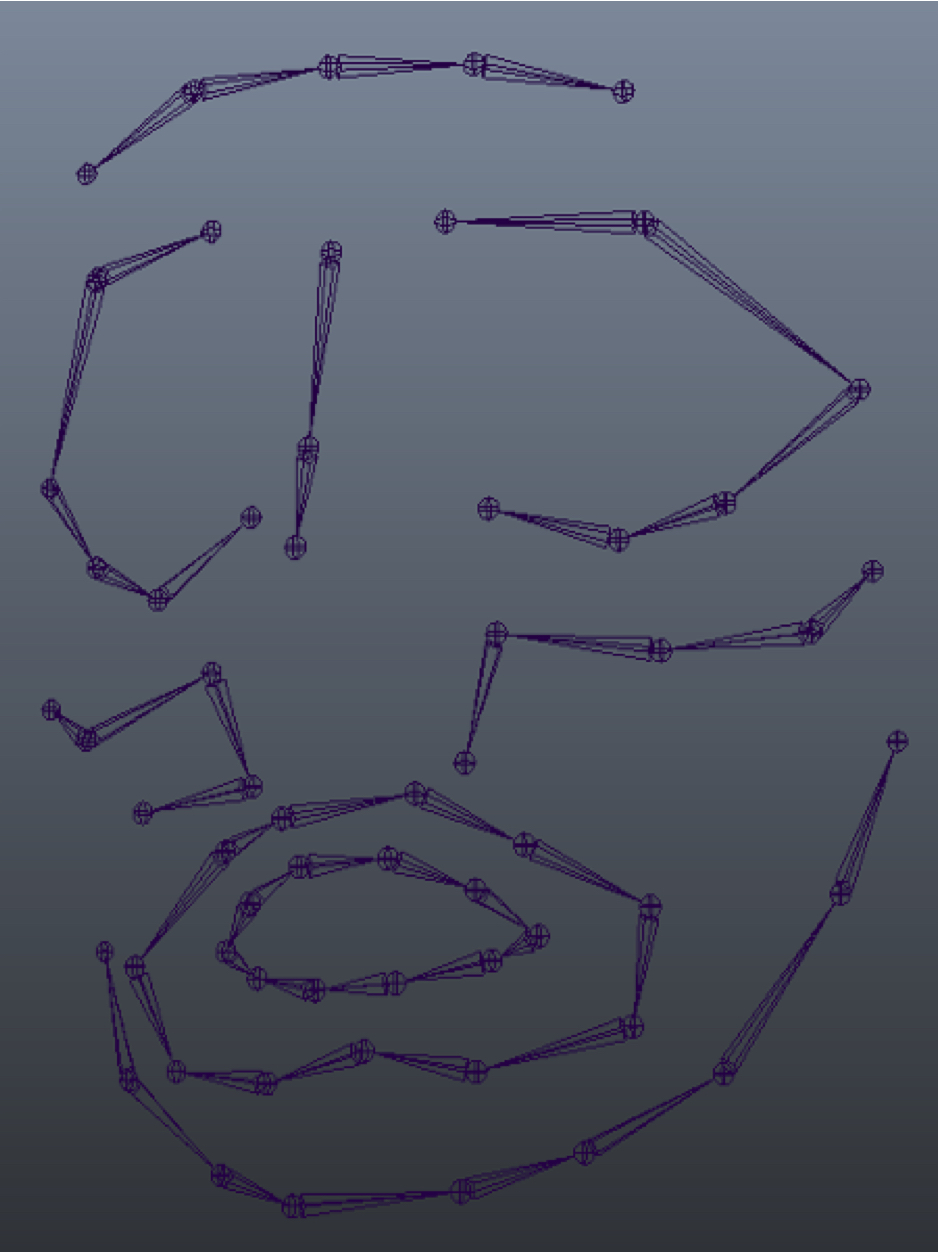

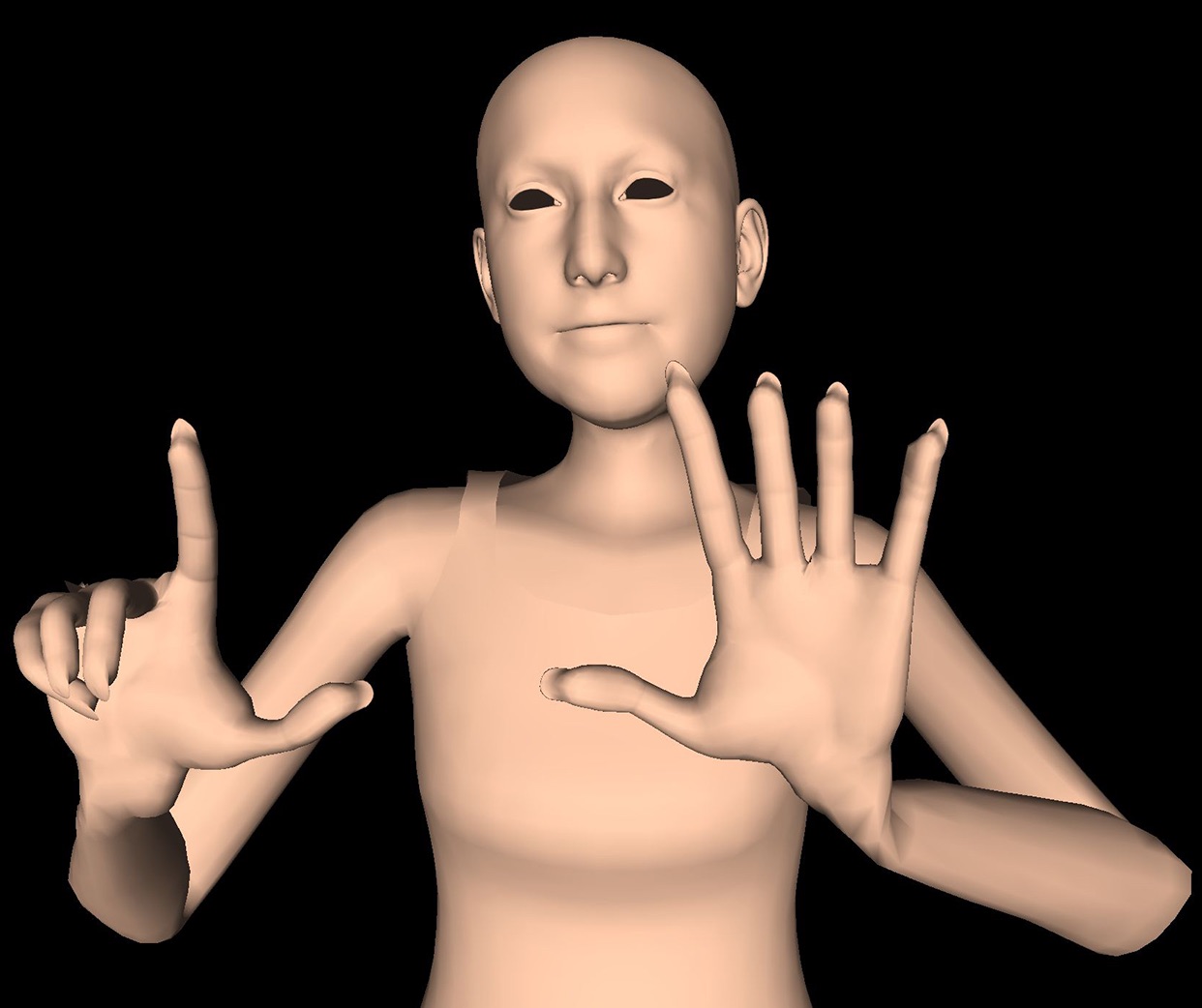

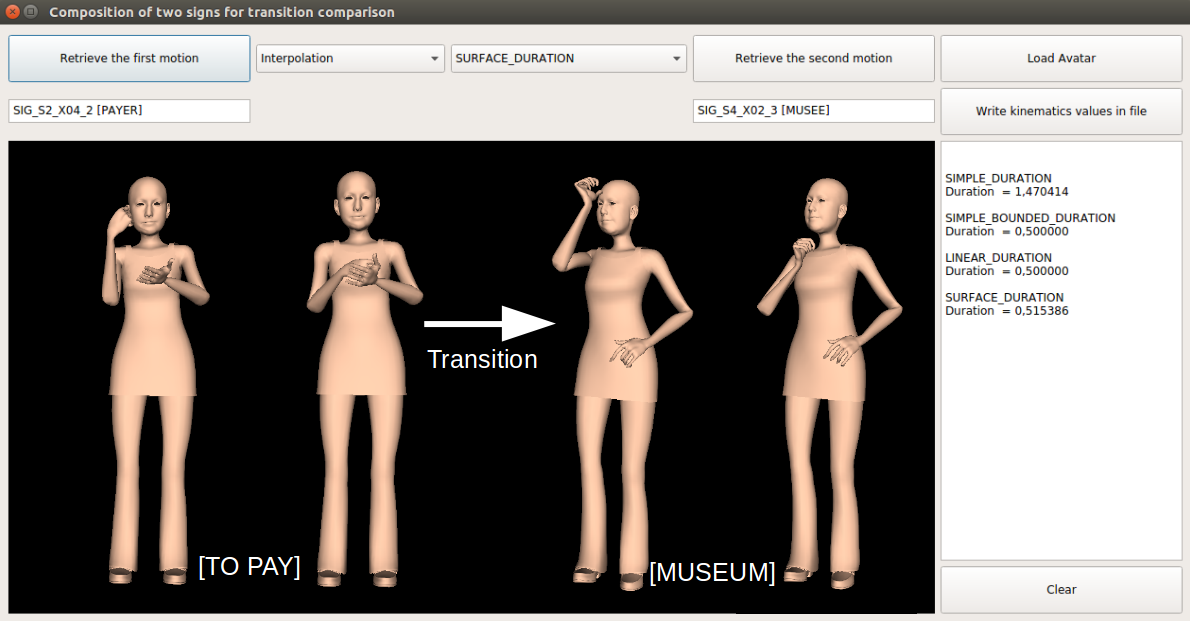

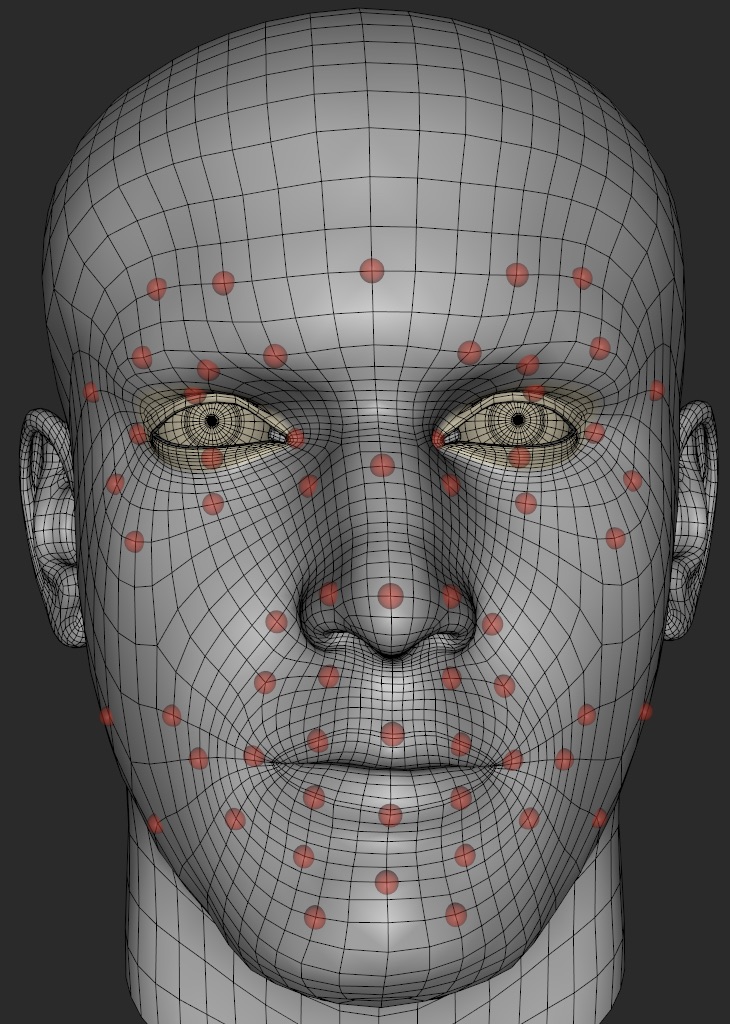

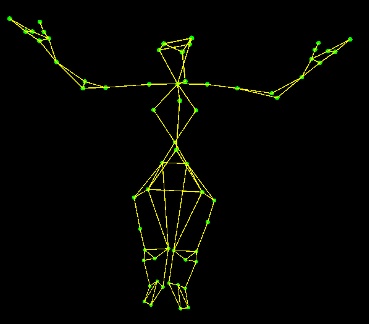

The Signing Avatar Project combines avatar technology and linguistics to bridge the communication gap between deaf and hearing people. Our goal is to develop an expressive digital translator from French to LSF (French Sign Language). We use motion capture technology, recording facial, hand and body data, to synthesize more natural movements, and we use concatenative synthesis to build new LSF phrases from pre-recorded motion.
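The idea behind concatenative synthesis can be sketched in a few lines of Python. This is only a minimal illustration, not the project's actual pipeline: the function name `concatenate_with_blend`, the pose representation (a flat list of floats per frame) and the linear cross-fade are all assumptions made for the example. Real systems typically interpolate joint rotations with quaternions and handle each channel (hands, face, body) separately.

```python
def concatenate_with_blend(clip_a, clip_b, overlap):
    """Join two motion clips, cross-fading linearly over `overlap` frames.

    Each clip is a list of poses and each pose is a list of floats
    (a hypothetical, simplified pose representation).
    """
    if overlap < 1 or overlap > min(len(clip_a), len(clip_b)):
        raise ValueError("overlap must be between 1 and the shortest clip length")

    head = clip_a[:-overlap]   # frames of clip_a left untouched
    tail = clip_b[overlap:]    # frames of clip_b left untouched
    blended = []
    for i in range(overlap):
        # Blend weight goes from clip_a towards clip_b across the overlap.
        t = (i + 1) / (overlap + 1)
        pose_a = clip_a[len(clip_a) - overlap + i]
        pose_b = clip_b[i]
        blended.append([(1 - t) * a + t * b for a, b in zip(pose_a, pose_b)])
    return head + blended + tail


# Hypothetical pre-recorded clips with a single degree of freedom per pose.
sign_one = [[0.0] for _ in range(4)]
sign_two = [[1.0] for _ in range(4)]
phrase = concatenate_with_blend(sign_one, sign_two, overlap=3)
```

The resulting phrase is shorter than the two clips laid end to end, because the overlapping frames are merged rather than appended.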

Related Publications

General

L. Naert, C. Larboulette and S. Gibet, A Survey on the Animation of Signing Avatars: From Sign Representation to Utterance Synthesis, Computers & Graphics, Volume 92, pages 76-98, Nov 2020. Publication page.

L. Naert, C. Larboulette and S. Gibet, LSF-ANIMAL: A Motion Capture Corpus in French Sign Language Designed for the Animation of Signing Avatars, in Proceedings of the Language Resources and Evaluation Conference, 2020. Publication page.

C. Larboulette and S. Gibet, Avatar signeurs : que peuvent-ils nous apprendre ?, in Proceedings of J.Enaction 2018 : Journée sur l'énaction en animation, simulation et réalité virtuelle, Nov 2018. Publication page.

L. Naert, C. Reverdy, C. Larboulette and S. Gibet, Per Channel Automatic Annotation of Sign Language Motion Capture Data, in Proceedings of the 8th Workshop on the Representation and Processing of Sign Languages: Involving the Language Community, LREC, May 2018. Publication page.

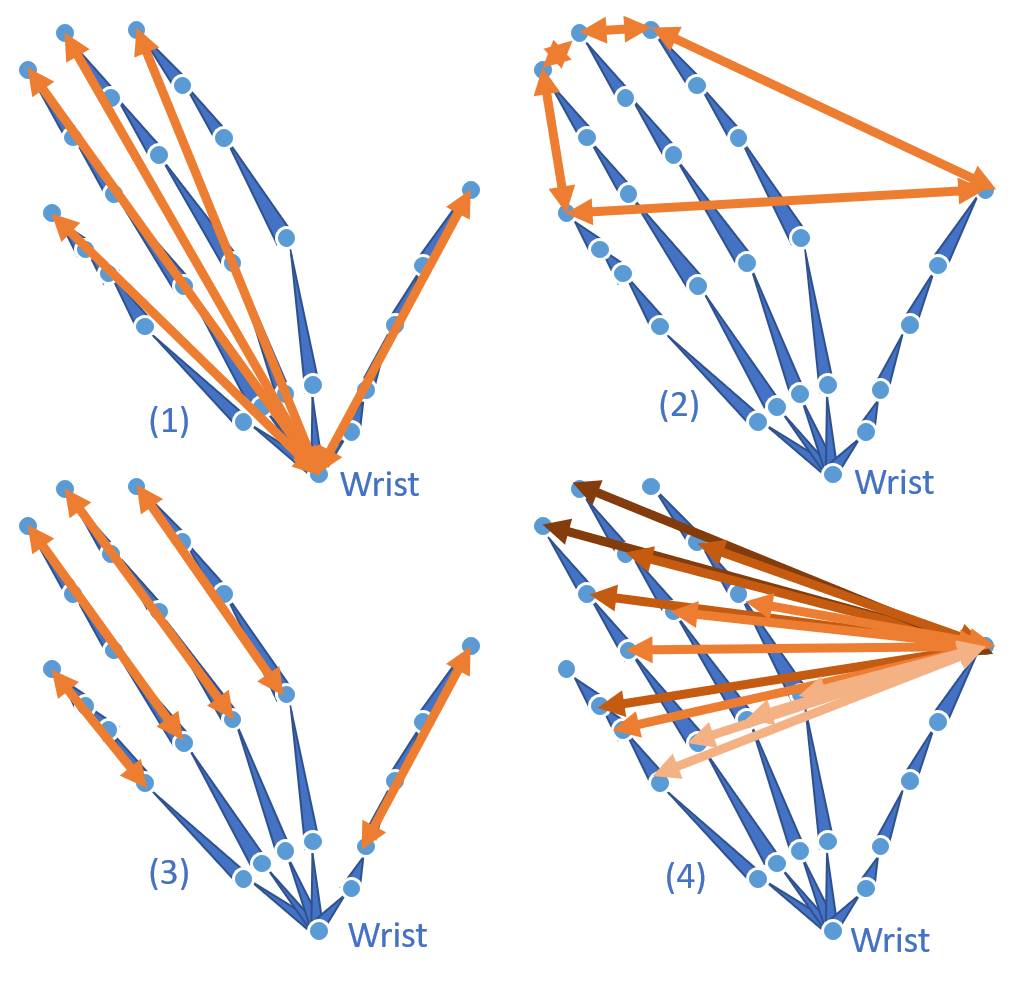

Hands

L. Naert, C. Larboulette and S. Gibet, Motion synthesis and editing for the generation of new sign language content: Building new signs with phonological recombination, Machine Translation, Volume 35, pages 405-430, Jun 2021. Publication page.

L. Naert, C. Larboulette and S. Gibet, Annotation automatique des configurations manuelles de la Langue des Signes Française à partir de données capturées, in Proceedings of JFIG, Oct 2017. Publication page.

L. Naert, C. Larboulette and S. Gibet, Coarticulation Analysis for Sign Language Synthesis, in Proceedings of the International Conference on Universal Access in Human-Computer Interaction, Jul 2017. Publication page.

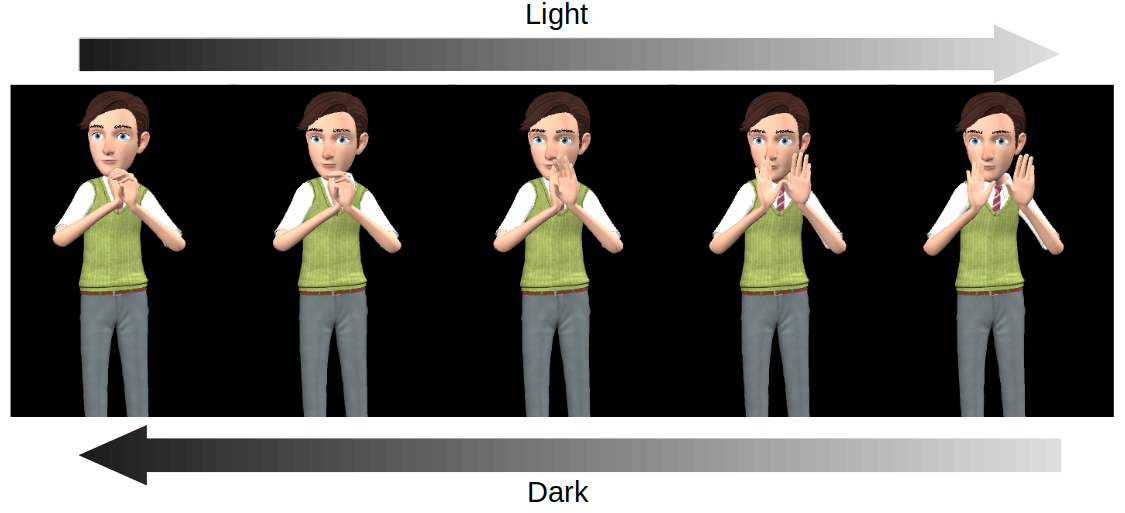

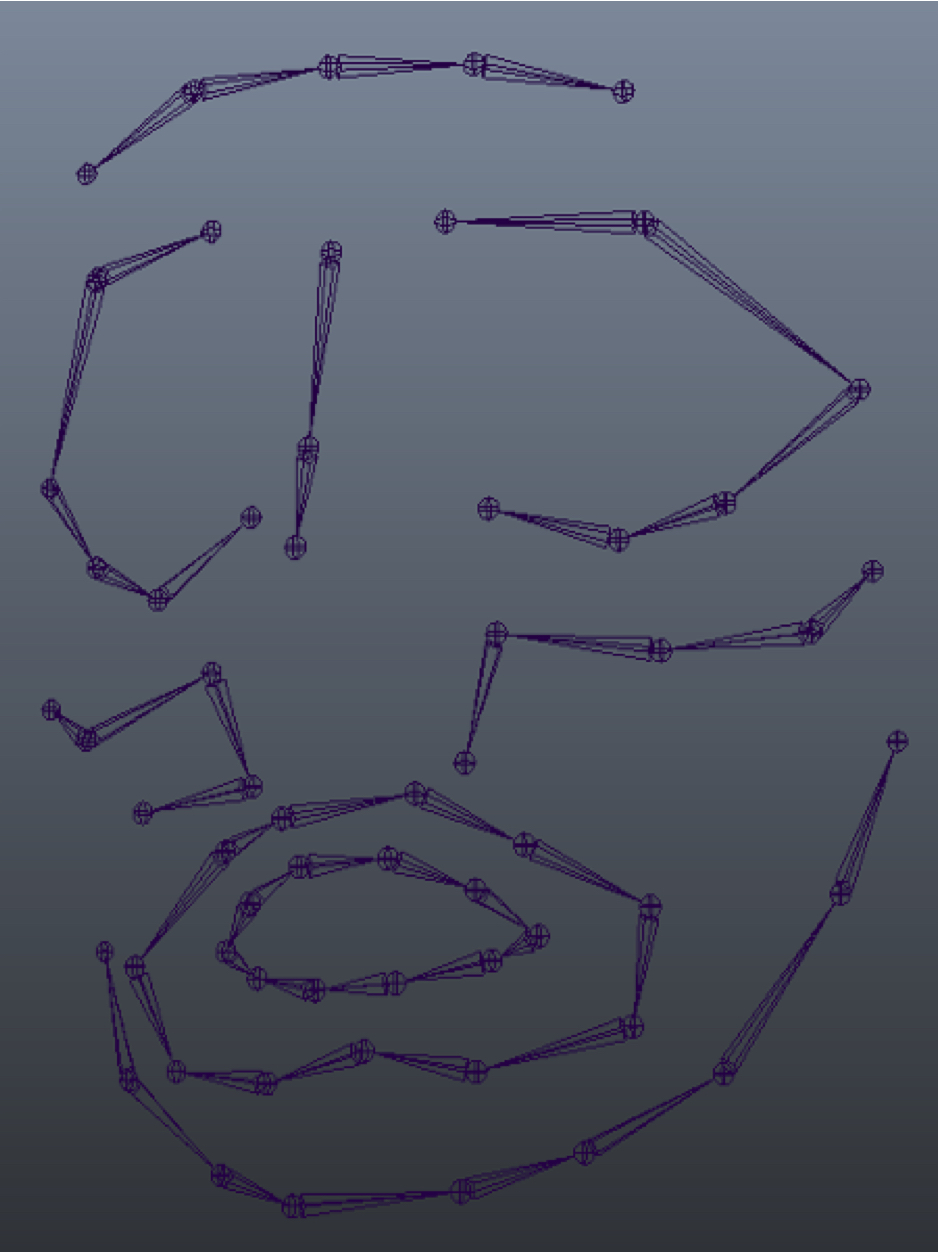

Facial Expressions

C. Reverdy, S. Gibet, C. Larboulette and P.-F. Marteau, Un système de synthèse et d’annotation automatique à partir de données capturées pour l’animation faciale expressive en LSF, in Proceedings of JFIG, Nov 2016. Publication page.

C. Reverdy, S. Gibet and C. Larboulette, Animation faciale basée données : un état de l’art, in Proceedings of AFIG, Nov 2015. Publication page.

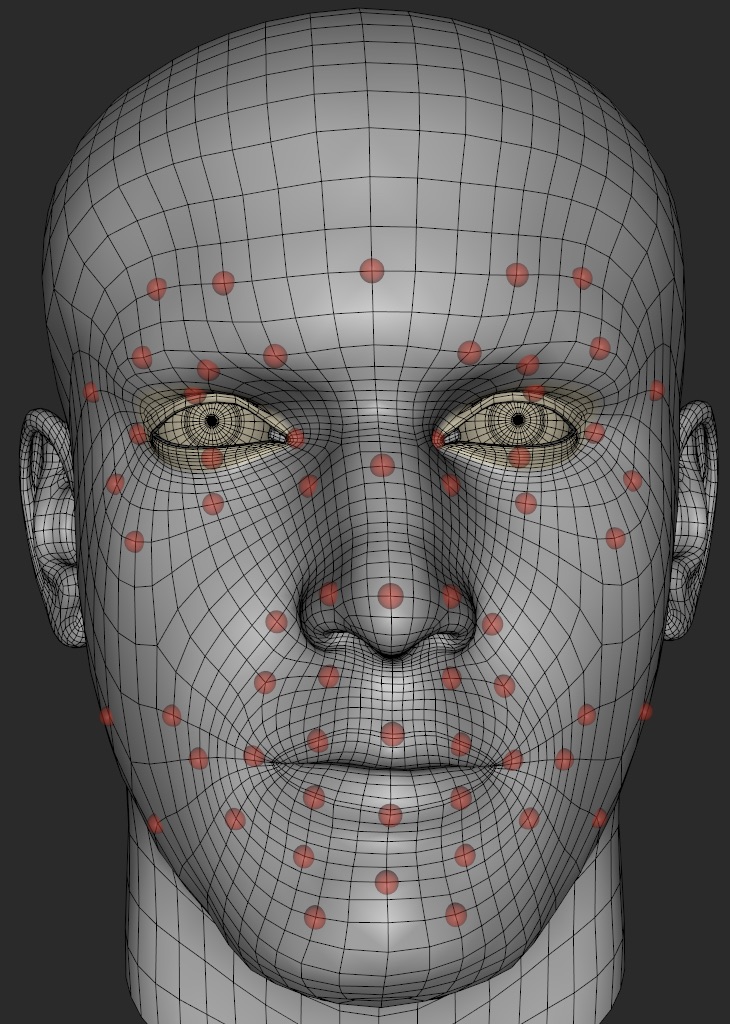

C. Reverdy, S. Gibet and C. Larboulette, Optimal Marker Set for Motion Capture of Dynamical Facial Expressions, in Proceedings of the ACM SIGGRAPH Conference on Motion in Games, Nov 2015. Publication page.


Motion Analysis and Synthesis from Captured Data


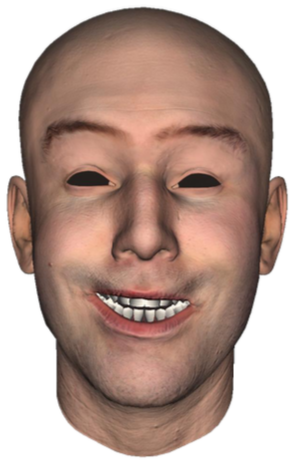

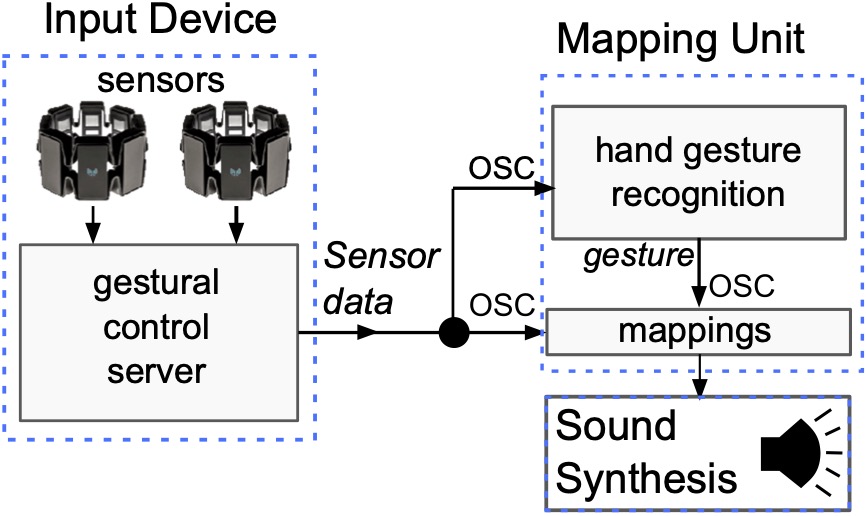

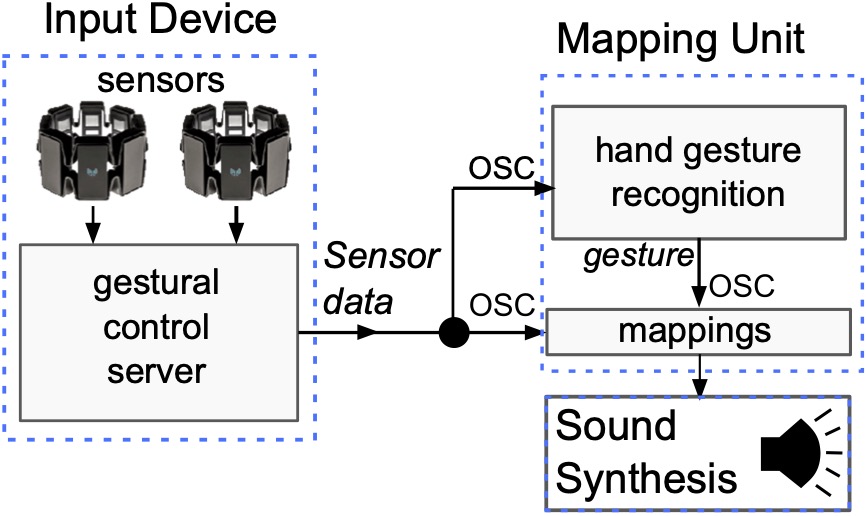

Motion descriptors can be computed from motion capture data. They range from low-level descriptors such as positions, velocities and accelerations to high-level descriptors such as the Effort descriptors of Laban Movement Analysis (LMA). Once computed, those descriptors can be used for various applications: synthesis of motion, physically based 3D simulation, physically based sound synthesis, or motion classification and retrieval from databases.
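As a rough illustration of the low-level end of that range, the sketch below derives velocities and accelerations from sampled marker positions using central finite differences. The function names, the 100 Hz frame rate and the sample trajectory are assumptions made for the example; the publications below describe the descriptors actually used, and real capture data would usually be filtered before differentiation.

```python
from math import sqrt


def finite_difference(samples, dt):
    """Central differences over a list of 3D vectors sampled every `dt` seconds.

    The result has two fewer samples than the input (no estimate at the ends).
    """
    return [
        tuple((after - before) / (2.0 * dt) for before, after in zip(prev, nxt))
        for prev, nxt in zip(samples[:-2], samples[2:])
    ]


def magnitudes(vectors):
    """Euclidean norm of each vector, e.g. speed from velocity."""
    return [sqrt(sum(c * c for c in v)) for v in vectors]


# Hypothetical marker moving along x at 1 m/s, captured at 100 Hz.
dt = 0.01
positions = [(0.00, 0.0, 1.0), (0.01, 0.0, 1.0), (0.02, 0.0, 1.0),
             (0.03, 0.0, 1.0), (0.04, 0.0, 1.0)]

velocities = finite_difference(positions, dt)       # 3 samples
accelerations = finite_difference(velocities, dt)   # 1 sample
speeds = magnitudes(velocities)
```

Higher-level descriptors such as the LMA Effort qualities are generally built on top of quantities like these, aggregated over several joints and over time windows.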

Related Publications

T. Brizolara Da Rosa, S. Gibet and C. Larboulette, Elemental: a Gesturally Controlled System to Perform Meteorological Sounds, in Proceedings of New Interfaces for Musical Expression, Jul 2020. Publication page.

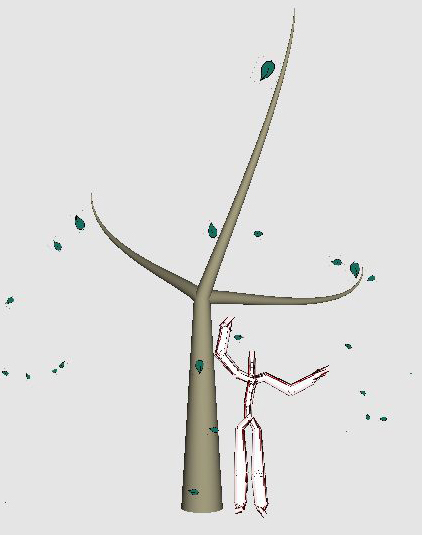

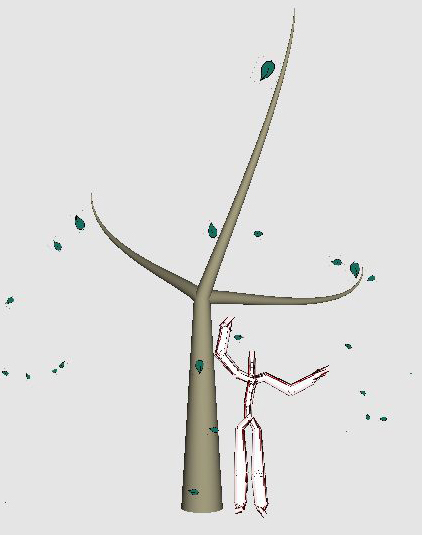

C. Larboulette and S. Gibet, I Am a Tree: Embodiment Using Physically Based Animation Driven by Expressive Descriptors of Motion, in Proceedings of the International Symposium on Movement and Computing, Jul 2016. Publication page.

C. Larboulette and S. Gibet, A Review of Computable Expressive Descriptors of Human Motion, in Proceedings of the International Workshop on Movement and Computing, Aug 2015. Publication page.


Modeling


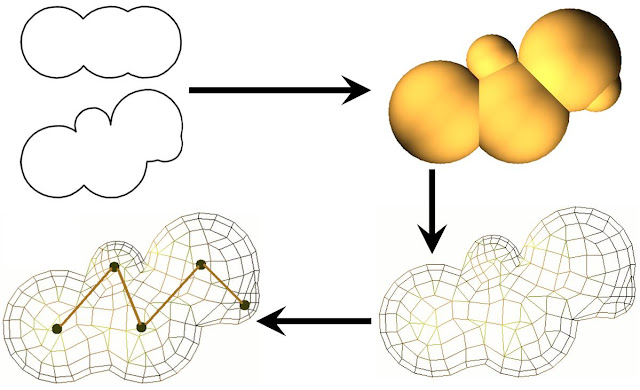

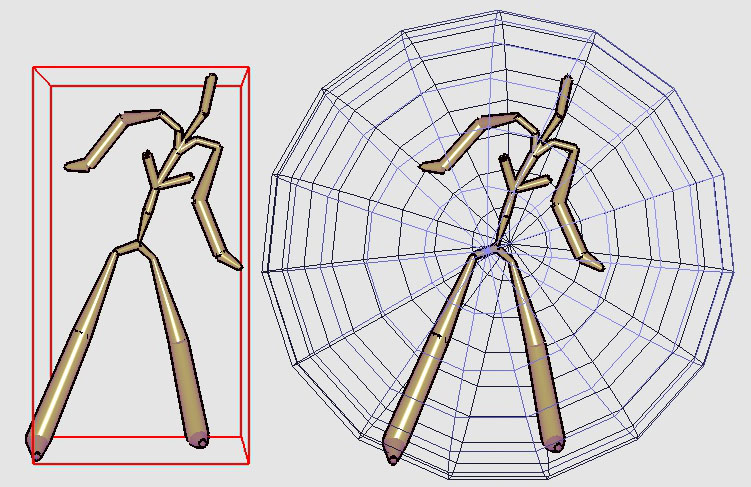

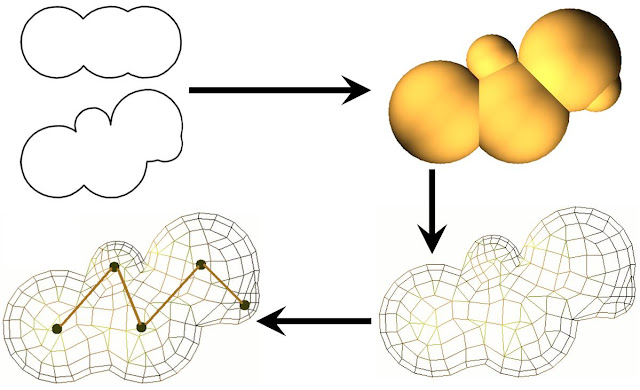

Models intended for animation need polygonal meshes that are well aligned with the silhouette of the character; such meshes are often symmetrical and made mainly of quads. Most automatic modeling techniques do not take these requirements into account. Manually created models are slow to produce and require a lot of do-undo work and a skilled artist. We therefore aim to produce 3D polygonal models automatically from 2D drawings.

Related Publications

Jose Javier Macias Sanchez, Marching Quads: an Implicit Surface Poligonizer Optimized for Models to be Animated, Master thesis from Universidad Rey Juan Carlos, May 2010. Publication page.


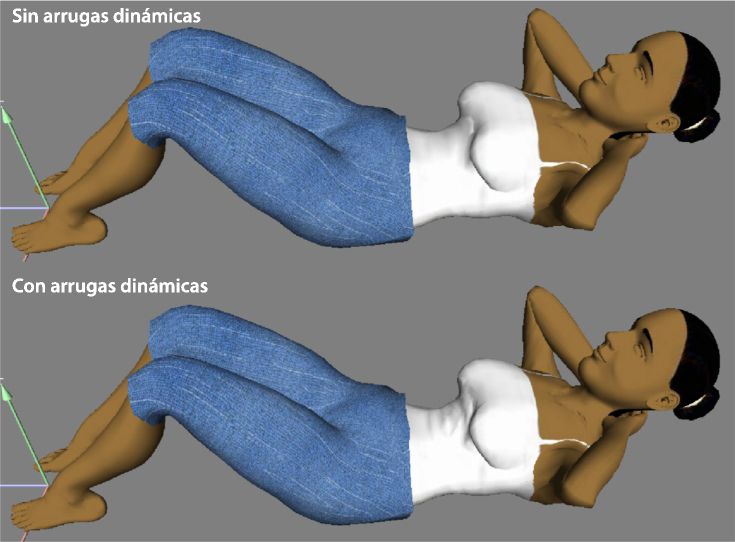

Character Animation


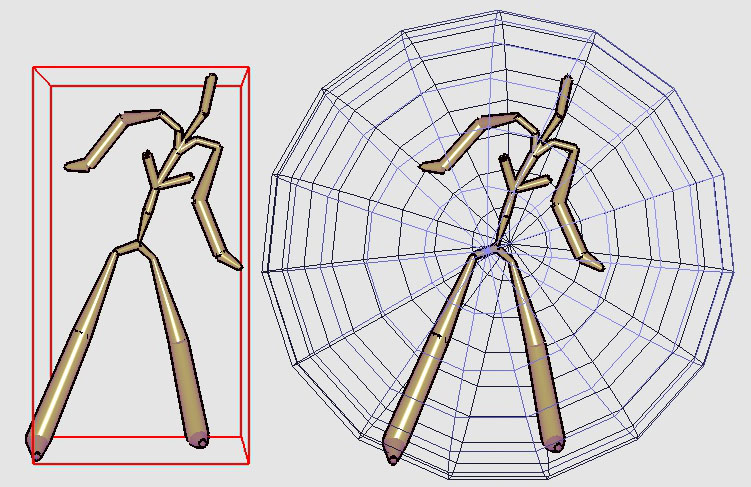

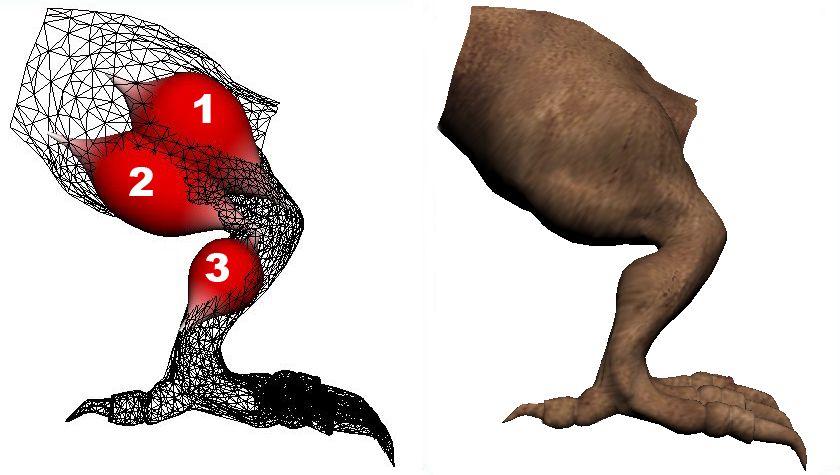

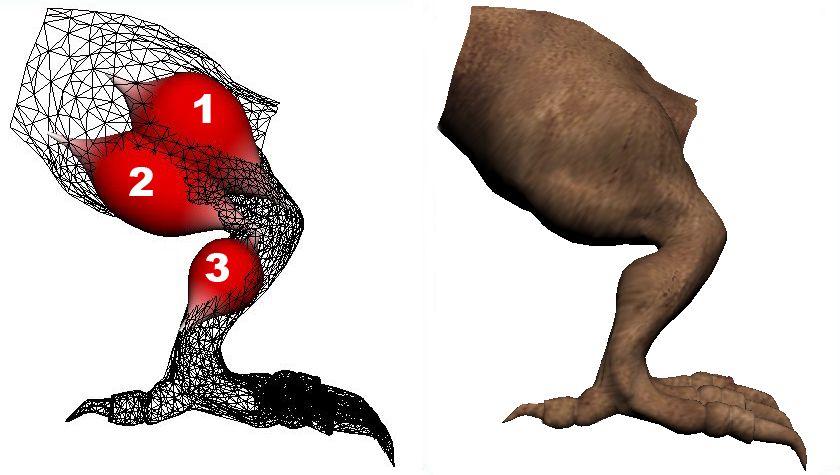

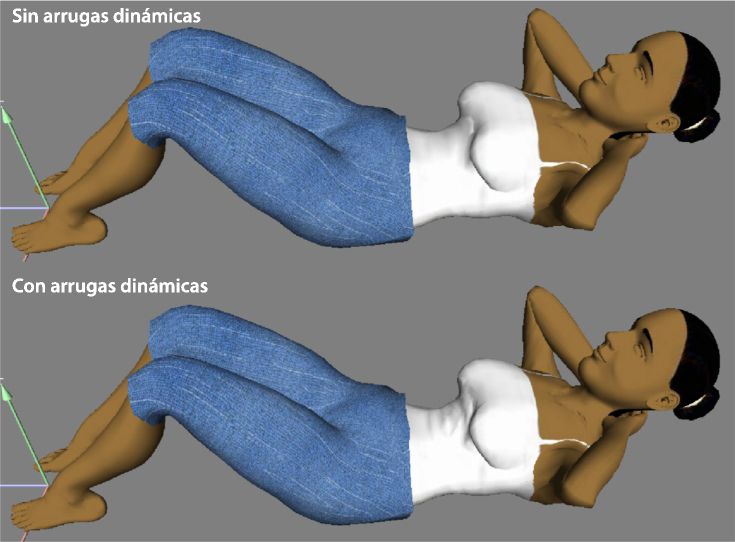

Skinning, also called Linear Blend Skinning (LBS) or Skeleton Subspace Deformations (SSD), is the standard technique for character animation to date. The character is composed of an internal skeleton and a polygonal mesh tied together through a rigging process. Although very efficient, this technique suffers from several drawbacks, including the well-known collapsing joint and candy wrapper effects. In addition, due to the nature of the algorithm itself, it is not directly possible to simulate muscle bulging, dynamics, or dynamic wrinkles of skin or clothes. Our aim is to enhance existing real-time character animations based on skinning techniques with muscles, dynamics and wrinkles to make them visually more appealing.
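A minimal 2D sketch of linear blend skinning shows where the collapsing joint comes from. Each skinned vertex is the weighted average of the positions it would take if rigidly attached to each bone. The helper names, the two-bone setup and the equal weights are assumptions chosen for illustration; bone transforms are taken to map the rest pose directly to the current pose, and weights are assumed to sum to one.

```python
from math import cos, sin, pi, hypot


def rotation_about(pivot, angle):
    """2D rigid transform (rotation matrix, translation) rotating about `pivot`."""
    c, s = cos(angle), sin(angle)
    px, py = pivot
    rotation = ((c, -s), (s, c))
    # x' = R (x - p) + p, so the translation part is p - R p.
    translation = (px - (c * px - s * py), py - (s * px + c * py))
    return rotation, translation


def apply_transform(transform, point):
    """Apply a (rotation, translation) pair to a 2D point."""
    (r00, r01), (r10, r11) = transform[0]
    tx, ty = transform[1]
    x, y = point
    return (r00 * x + r01 * y + tx, r10 * x + r11 * y + ty)


def linear_blend_skinning(vertex, weights, bone_transforms):
    """Weighted average of the vertex as moved by each bone."""
    x = y = 0.0
    for weight, transform in zip(weights, bone_transforms):
        bx, by = apply_transform(transform, vertex)
        x += weight * bx
        y += weight * by
    return (x, y)


# Hypothetical elbow: the upper arm stays put, the forearm bends by 90 degrees.
joint = (0.0, 0.0)
upper_arm = rotation_about(joint, 0.0)
forearm = rotation_about(joint, pi / 2)

vertex = (0.0, 1.0)  # skin vertex one unit away from the joint
skinned = linear_blend_skinning(vertex, (0.5, 0.5), (upper_arm, forearm))

rest_distance = hypot(vertex[0] - joint[0], vertex[1] - joint[1])
posed_distance = hypot(skinned[0] - joint[0], skinned[1] - joint[1])
```

In this setup the blended vertex ends up closer to the joint than it was in the rest pose, which is the loss of volume seen around bent joints. Because the blend is a plain average of rigid positions, it also carries no notion of muscle shape, inertia or skin compression, which is why such effects have to be layered on top.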

Related Publications

Muscles

J. Ramos and C. Larboulette, A Muscle Model for Enhanced Character Skinning, Journal of WSCG, Volume 21, Number 2, pages 107-116, Jul. 2013. Publication page.

J. Ramos and C. Larboulette, Real-Time Anatomically Based Character Animation, Posters & Demos, ACM SIGGRAPH Symposium on Interactive 3D Graphics and Games 09, Boston, MA, Feb. 09. Publication page.

Dynamics

C. Larboulette, M.-P. Cani and B. Arnaldi, Ajout de détails dynamiques a une animation temps-réel de personnage, Adding Dynamic Details to a Real-Time Character Animation, Technique et Science Informatiques, volume 6, Nov. 06. Publication page.

C. Larboulette, M.-P. Cani and B. Arnaldi, Dynamic Skinning: Adding Real-time Dynamic Effects to an Existing Character Animation, in Proceedings of the Spring Conference on Computer Graphics '05, Budmerice, Slovakia, May 05. Publication page.

C. Larboulette, Traitement temps-réel des déformations de la peau et des tissus sous-cutanés pour l'animation de personnages, Real-Time Processing of the Deformations of the Skin and Sub-cutaneous Tissues for Character Animation, Ph.D. Thesis from INSA de Rennes, France, Nov. 04. Publication page.

Wrinkles

C. Larboulette, M.-P. Cani and B. Arnaldi, Ajout de détails dynamiques a une animation temps-réel de personnage, Adding Dynamic Details to a Real-Time Character Animation, Technique et Science Informatiques, volume 6, Nov. 06. Publication page.

C. Larboulette and M.-P. Cani, Real-Time Dynamic Wrinkles, in Proceedings of Computer Graphics International '04, Crete, Greece, Jun. 04. Publication page.

C. Larboulette, M.-P. Cani and B. Arnaldi, Ajout de détails a une animation de personnage existante: cas des plis dynamiques, Adding Details to an Existing Character Animation: Case of the Dynamic Wrinkles, in Proceedings of the Journées Francophones d'Informatique Graphique (AFIG'04), Poitiers, France, Nov. 04. Publication page.

C. Larboulette, Traitement temps-réel des déformations de la peau et des tissus sous-cutanés pour l'animation de personnages, Real-Time Processing of the Deformations of the Skin and Sub-cutaneous Tissues for Character Animation, Ph.D. Thesis from INSA de Rennes, France, Nov. 04. Publication page.


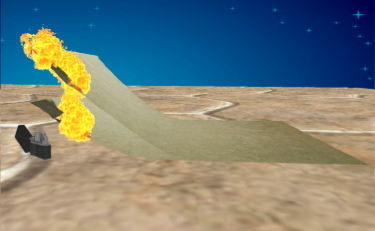

Natural Phenomena


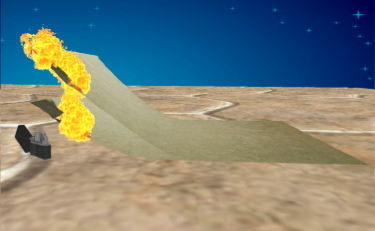

Characters necessarily evolve in an environment and interact with objects. For example, fish swimming in the sea interact with anemones in different ways depending on the type of fish. The anemones themselves sway in the water much as trees sway in the wind, reacting to the fluid surrounding them. Fire burns things such as paper, which crumples as it loses water and propagates heat as it burns.

Related Publications

Sea Anemones and Seaweeds

J. Aliaga and C. Larboulette, Physically Based Animation of Sea Anemones in Real-Time, in Proceedings of the 25th Spring Conference on Computer Graphics, ACM SIGGRAPH, Budmerice, Slovakia, Apr. 09. Publication page.

Paper Burning and Crumpling

Caroline Larboulette, Pablo Quesada Barriuso and Olivier Dumas, Burning Paper: Simulation at the Fiber's Level, in Proceedings of the ACM SIGGRAPH Conference on Motion in Games, Dublin, Ireland, Nov. 13. Publication page.

Pablo Quesada Barriuso, Burning Paper: Simulation at the Fiber's Level, Master thesis from Universidad Rey Juan Carlos, Jul. 2010. Publication page.


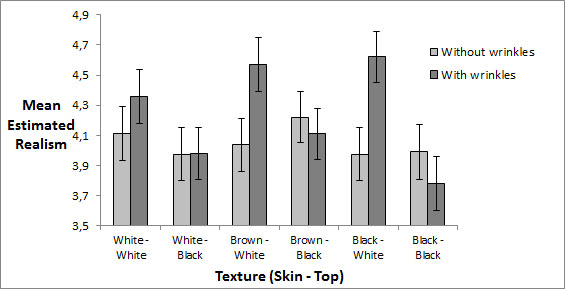

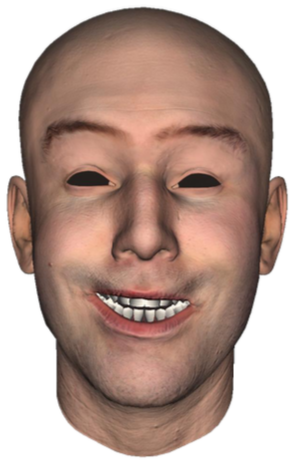

Perceptually based Algorithms


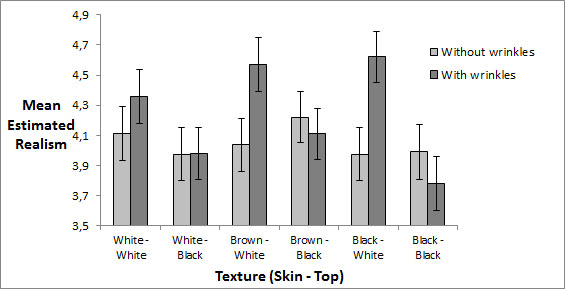

More accurate does not mean perceived as more realistic. The progress made in character animation and rendering over the last twenty years has brought us to the uncanny valley. To climb out of it, the current trend is to incorporate perception into rendering and animation algorithms. To derive new perceptually based algorithms, perceptual studies are conducted to find out which effects are perceived by the human eye and brain, and under which conditions.

Perceptual studies are also developed to analyse the expressiveness, meaning and style of human motion, and to classify motion capture data.

Related Publications

P. Carreno-Medrano, S. Gibet, C. Larboulette and P.-F. Marteau, Corpus Creation and Perceptual Evaluation of Expressive Theatrical Gestures, in Proceedings of the International Conference on Intelligent Virtual Agents, Boston, MA, Aug 2014. Publication page.

J. Alcon, D. Travieso and C. Larboulette, Influence of Dynamic Wrinkles on the Perceived Realism of Real-Time Character Animation, in Proceedings of the International Conference on Computer Graphics, Visualization and Computer Vision (WSCG), pages 95-103, Jun. 2013. Publication page.

Francisco Javier Alcon Palazon, A Study of the Influence of Dynamic Wrinkles on the Perceived Realism of Real-Time Character Animations, Master thesis from Universidad Rey Juan Carlos, Jul. 2010. Publication page.


Art, Color Science & Computational Aesthetics


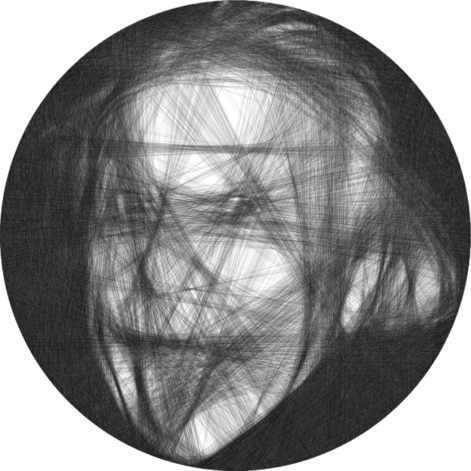

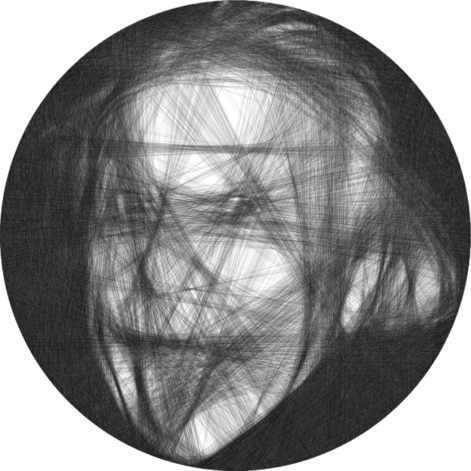

Computational Art aims at creating images using simulation algorithms or as a non-photorealistic rendering of an input image. Computational String Art is an example of non-photorealistic rendering of an input black and white photograph. Creating realistic images is one thing; creating aesthetic ones is another. Two important factors of aesthetics are shape and color, and it is possible to derive objective metrics for them (aesthetics is not entirely subjective, contrary to common belief). Measures of color aesthetics have thus been incorporated into Color Order Systems (COS) such as the Munsell COS or Coloroid. Two questions we have addressed are how to produce and classify fluorescent colors (which change depending on the illuminant) and how to use a COS to create aesthetically pleasing images.

Related Publications

B. Demoussel, C. Larboulette and R. Dattatreya, A Greedy Algorithm for Generative String Art, in Proceedings of Bridges 2022: Mathematics, Art, Music, Architecture, Culture, Helsinki, Finland, Aug 2022. Publication page.

C. Larboulette, Celtic Knots Colorization based on Color Harmony Principles, in Proceedings of the International Symposium on Computational Aesthetics '07, Banff, Canada, Jun. 07. Publication page.

A. Wilkie, A. Weidlich, C. Larboulette and W. Purgathofer, A Reflectance Model for Diffuse Fluorescent Surfaces, in Proceedings of GRAPHITE '06, Kuala Lumpur, Malaysia, Nov. 06. Publication page.

A. Wilkie, C. Larboulette and W. Purgathofer, Spectral Colour Order Systems and Appearance Metrics for Fluorescent Solid Colours, in Proceedings of the Eurographics Workshop on Computational Aesthetics '05, Girona, Spain, May 05. Publication page.
