Two papers to be published in the TMLR journal

Two papers were recently accepted to the new journal Transactions on Machine Learning Research (TMLR): "Efficient Gradient Flows in Sliced-Wasserstein Space" (arXiv link) and "Time Series Alignment with Global Invariances" (arXiv link).

Two papers accepted at NeurIPS 2022 (including one oral)!

Two papers will be featured in the NeurIPS 2022 program! Congratulations to the main authors (among others, Cédric Vincent-Cuaz, Huy Tran and Alexis Thual) and to our amazing collaborators: "Template based Graph Neural Network with Optimal Transport Distances" (arXiv link) and "Aligning individual brains with Fused Unbalanced Gromov-Wasserstein" (arXiv link).

One paper at ICLR 2022

One paper on the semi-relaxed Gromov-Wasserstein divergence and its applications (work by Cédric Vincent-Cuaz) will be featured in the ICLR 2022 program: "Semi-relaxed Gromov-Wasserstein divergence with applications on graphs" (arXiv link).

Paper Accepted in IEEE Transactions on PAMI

Our paper on Wasserstein Adversarial Regularization was accepted in IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI): "Wasserstein Adversarial Regularization for learning with label noise" (arXiv link).

2 new publications at ICML 2021

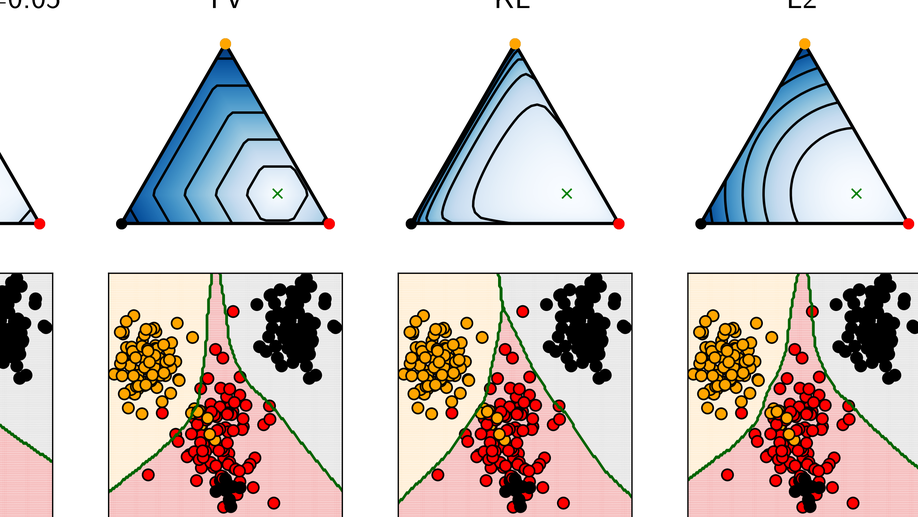

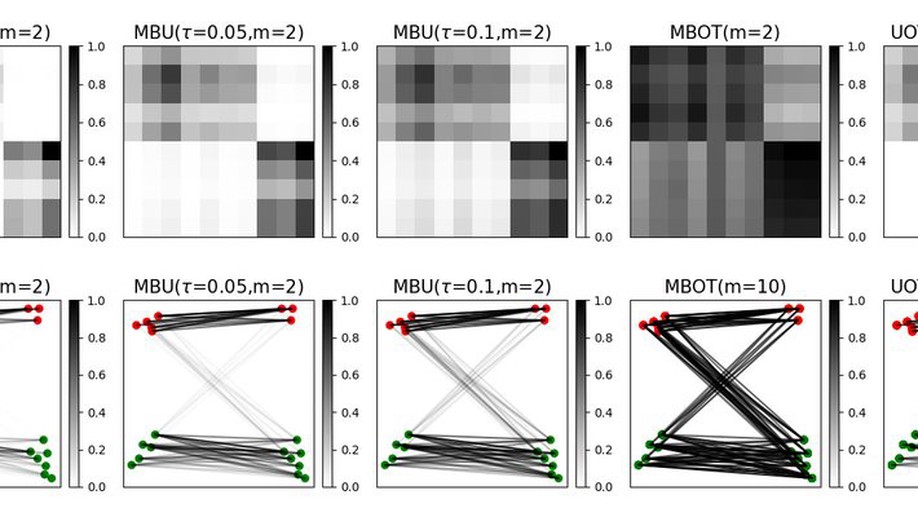

Two of our papers have made it to ICML 2021: "Unbalanced minibatch Optimal Transport; applications to Domain Adaptation" (arXiv link) and "Online Graph Dictionary Learning" (arXiv link).

POT has made it to JMLR

POT (the Python Optimal Transport toolbox) is a fantastic library for solving optimal transport problems in Python. The associated technical paper has been published in the software track of JMLR. Please check out the POT website.

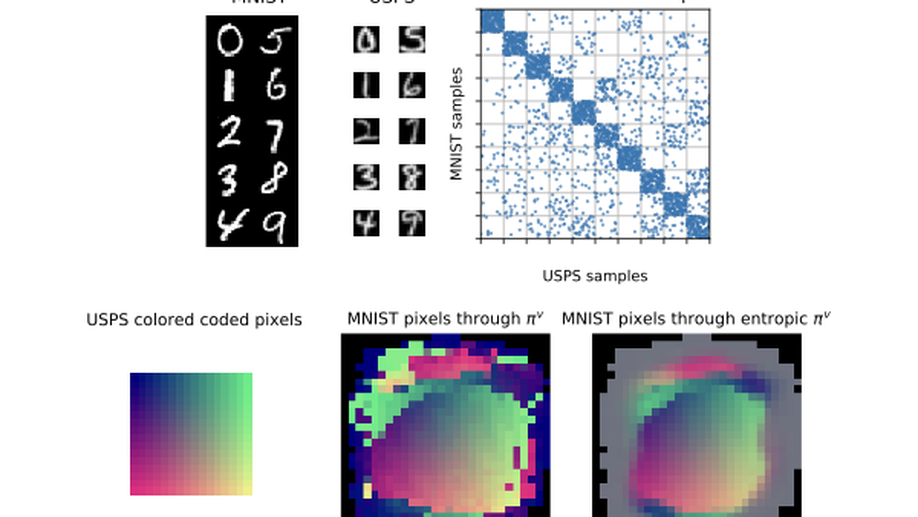

New publication at NeurIPS 2020: Co-Optimal Transport

Optimal transport (OT) is a powerful geometric and probabilistic tool for finding correspondences and measuring similarity between two distributions. Yet its original formulation relies on the existence of a cost function between the samples of the two distributions, which makes it impractical for comparing data distributions supported on different topological spaces.

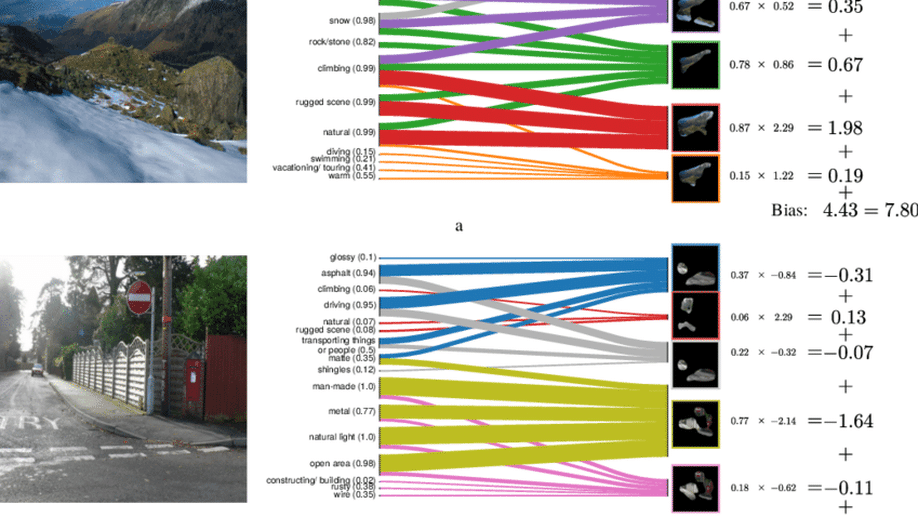

One paper accepted at ACCV 2020

One paper on Contextual Semantic Interpretability was accepted at ACCV 2020, with Diego Marcos, Ruth Fong, Sylvain Lobry, Rémi Flamary and Devis Tuia. Paper abstract: Convolutional neural networks (CNNs) are known to learn an image representation that captures concepts relevant to the task, but they do so implicitly, which hampers model interpretability.

Our paper on Fused Gromov-Wasserstein published in Algorithms

Our in-depth paper on the Fused Gromov-Wasserstein distance is published in a special issue of MDPI Algorithms on graphs, and it made the cover! Check out the special issue.

One paper accepted at AISTATS 2020 in (virtual) Kyoto

The paper ‘Learning with minibatch Wasserstein: asymptotic and gradient properties’ by my PhD student Kilian Fatras is among the accepted papers at AISTATS 2020 (joint work with Younes Zine, Rémi Flamary and Rémi Gribonval).