Olivier Le Meur

Contact Information


Email:

Address: IRISA, Campus Universitaire de Beaulieu, 35042 Rennes Cedex, France


Team leader of the PERCEPT team: here

What's new?

- 2020: A new project on Affinity Therapy for people with ASD has been accepted! More information coming soon. NEW!

- 2020: One paper accepted in Drones with Anne-Flore Perrin (EyeTrackUAV2: a Large-Scale Binocular Eye-Tracking Dataset for UAV Videos) [here] NEW!

- 2020: One paper accepted at IEEE VR with Florian Berton (Eye-Gaze Activity in Crowds: Impact of Virtual Reality and Density) [here] NEW!

- 2020: One paper accepted at ETRA with Pierre-Adrien Fons (Predicting image influence on visual saliency distribution: the focal and ambient dichotomy) [here] NEW!

- 2019: Tutorial at IEEE VR 2019 (Eye-tracking in 360: Methods, Challenges, and Opportunities) [Here]

- 2019: Tutorial at VISIGRAPP 2019 (A guided tour of computational modelling of visual attention) [Here]

- 2019: One paper accepted in Multimedia Tools and Applications (Liu et al., Saliency-aware inter-image color transfer for image manipulation)

- 2018: Paper accepted at ETRA 2018 [Here]

- 2017: Special issue on Visual Information Processing for Virtual Reality, Journal of Visual Communication and Image Representation [call]

- 2017: One paper in IEEE TIP (Visual attention saccadic models learn to emulate gaze patterns from childhood to adulthood)

- 2017: BEST PAPER AWARD for the paper published in CGI 2017 (High-Dynamic-Range Image Recovery from Flash and Non-Flash Image Pairs)

- 2017: One paper in ACM TOM (Creating Segments and Effects on Comics by Clustering Gaze Data)

- 2017: One paper in IEEE TIP (Depth-aware salient object detection and segmentation via multiscale discriminative saliency fusion and bootstrap learning)

- 2016: Tutorial at Eurographics [mySlides], [web site].

- 2016: One paper in Vision Research (Introducing context-dependent and spatially-variant viewing biases in saccadic models) [Here]

- 2015: One paper in Vision Research (Saccadic model of eye movements for free-viewing condition) [Here].

- 2015: One paper accepted in IEEE TIP (Video inpainting with short-term windows: application to object removal and error concealment) [Here]

- 2014: One paper accepted at ACCV 2014 (Saliency aggregation: Does unity make strength?)

- 2014: One paper accepted in IEEE TCSVT (Superpixel-Based Spatiotemporal Saliency Detection)

- 2014: One paper accepted in IEEE TIP (Saliency Tree: A Novel Saliency Detection Framework)

- 2014: Our review on inpainting is now available in IEEE Signal Processing Magazine (2014)

- 2013: Supplementary materials for the SPIE HVEI'13 paper (How visual attention is modified by disparities and textures changes?) [Here]

- 2013: One paper accepted in IEEE TIP (Hierarchical super-resolution-based inpainting) [Here]

- 2013: One paper accepted at ICIP'13 (Memorability of natural scene: the role of attention) [Here]

- 2013: One paper accepted in Optics Letters (Saliency detection using regional histograms [pdf])

- 2012: We released the first version (V1.0) of the BRM software for visual fixation analysis: here

- 2012: One paper accepted in the BRM journal (Similarity metrics for assessing the performance of computational models of visual attention) here

- 2012: One paper accepted at ECCV 2012 (Super-resolution-based inpainting, demo Here)

- 2012: Special session on visual attention at SPIE 2012: here

- 2012: Video inpainting: one paper accepted at ICIP 2012

- 2012: Dynamic saliency map for 3D content: one paper accepted in Cognitive Computation journal 2012

- 2011: Focal vs. ambient fixations: one paper accepted in the i-Perception journal, 2011

- 2011: Visual dispersion between observers: one paper accepted at ACM Multimedia 2011 (long paper)

Research Areas


Visual attention understanding and modelling: three research axes

- Computational modelling of the visual attention:

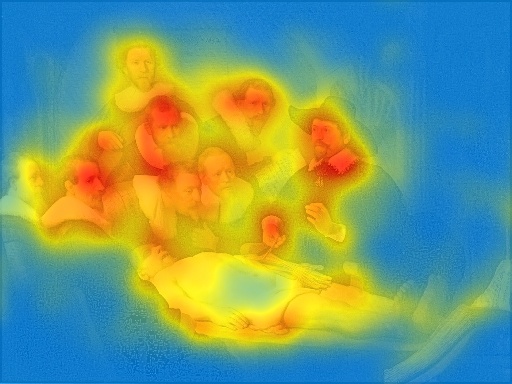

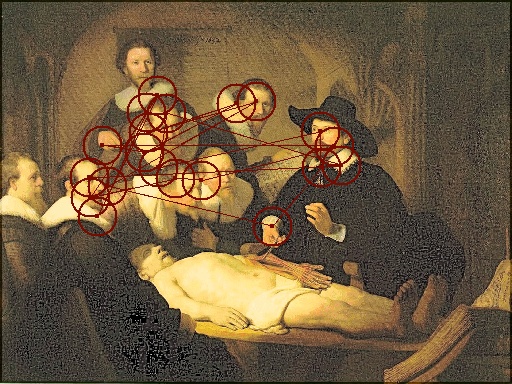

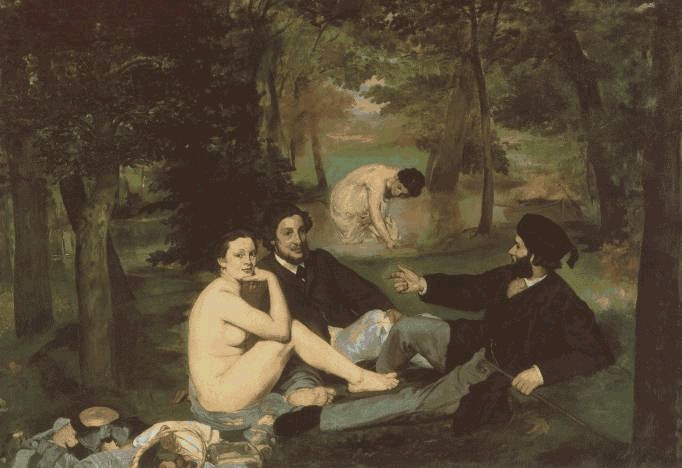

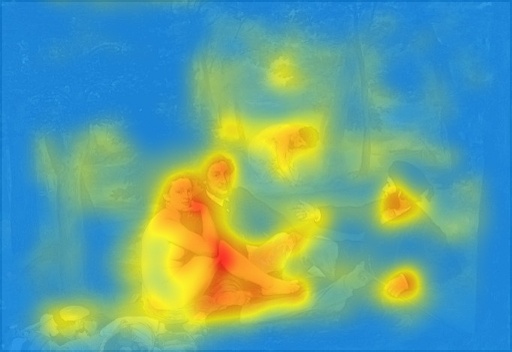

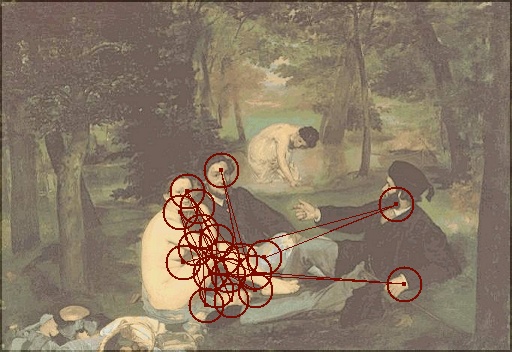

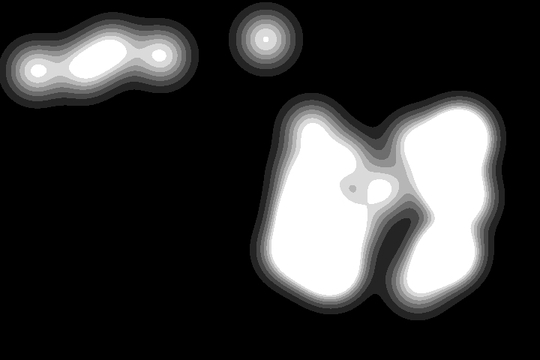

From left to right: original picture, heat map and visual scan path (first 20 fixation points).

Leçon d'anatomie, Rembrandt

Déjeuner sur l'herbe, Manet

Eye-tracking data set: here


Special sessions at ICIP'09 and SPIE 2012: here


- Relationship between visual fixations and scene context:

Would it be possible to categorize fixations as attentional fixations, semantic fixations...?

Is there a particular pattern of gaze deployment for a given scene?

- Examining gaze deployment during a quality assessment task: application to quality metrics.

A. Ninassi, From local perception of coding distortions to the overall visual quality evaluation of images and video. Contribution of visual attention in visual quality assessment, PhD thesis, University of Nantes, 2009.

- Do video coding impairments disturb the visual attention deployment?, Elsevier, Signal Processing: Image Communication, 2010. [IF=0.836]

From left to right: original picture, degraded pictures and saliency map. [Project page] NEW!

- Overt visual attention for free-viewing and quality assessment tasks. Impact of the regions of interest on a video quality metric, Elsevier, Signal Processing: Image Communication, 2010. [IF=0.836]
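The heat maps shown above for the two paintings are commonly obtained by placing a Gaussian blob on each recorded fixation and summing the blobs, while the scan path is simply the ordered sequence of fixations. The pure-Python sketch below illustrates that common construction under stated assumptions: fixations are given as (x, y) pixel coordinates, and the Gaussian width `sigma` is an illustrative value (in practice it is often tied to about one degree of visual angle). `fixation_heatmap` is a hypothetical helper, not the code used to produce these figures.

```python
import math


def fixation_heatmap(fixations, height, width, sigma=2.0):
    """Build a fixation heat map normalised so that its maximum is 1.

    fixations: iterable of (x, y) pixel coordinates, x = column, y = row.
    sigma: standard deviation of each Gaussian blob, in pixels
    (illustrative default, an assumption of this sketch).
    """
    heat = [[0.0] * width for _ in range(height)]
    for x, y in fixations:
        # Add one isotropic Gaussian centred on the fixation.
        for i in range(height):
            for j in range(width):
                d2 = (j - x) ** 2 + (i - y) ** 2
                heat[i][j] += math.exp(-d2 / (2.0 * sigma ** 2))
    peak = max((v for row in heat for v in row), default=0.0)
    if peak > 0:
        # Normalise so that the most fixated location has value 1.
        heat = [[v / peak for v in row] for row in heat]
    return heat


# Usage: keep only the first 20 fixations of a scan path, as in the caption.
scanpath = [(10, 12), (30, 8), (31, 9)]  # hypothetical (x, y) fixations
hm = fixation_heatmap(scanpath[:20], height=24, width=40, sigma=3.0)
```

The triple loop costs O(N·H·W) for N fixations; convolving a map of fixation counts with a separable Gaussian kernel gives the same result faster on full-size images.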


Saliency-based applications:

- Perceptual/selective compression: some details in O. Le Meur et al., Selective H.264 video coding based on a saliency map, unpublished, 2005. [pdf]

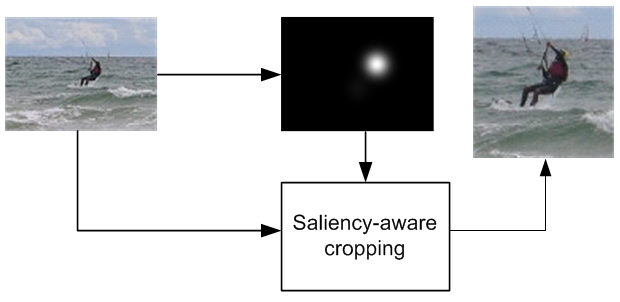

- Content-aware image cropping:

The image is cropped according to its saliency map, so that the most salient regions are kept in the cropped picture.

More details in:

O. Le Meur, X. Castellan, P. Le Callet, D. Barba, Efficient saliency-based repurposing method, ICIP, 2006. [pdf] [poster]

C. Chamaret and O. Le Meur, Attention-based video reframing: validation using eye-tracking, ICPR, 2008. [pdf]

- Data pruning based on saliency: Y. Andam, Master's thesis, 2010. An example based on Weickert's study: the picture is reconstructed from its edges using a diffusion scheme.
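The core of saliency-based cropping can be sketched as a search for the fixed-size window that contains the most saliency. The sketch below assumes a precomputed saliency map and a fixed crop size; it is a simplified illustration, not the method of the ICIP 2006 or ICPR 2008 papers cited above, and `best_crop` is a hypothetical name. A summed-area table turns every window sum into an O(1) lookup.

```python
def best_crop(saliency, crop_h, crop_w):
    """Return (top, left) of the crop_h x crop_w window with maximal total saliency.

    saliency: 2-D list of non-negative floats (rows x columns).
    """
    h, w = len(saliency), len(saliency[0])
    if not (0 < crop_h <= h and 0 < crop_w <= w):
        raise ValueError("crop window must fit inside the saliency map")
    # Summed-area table with a zero border:
    # S[i][j] = sum of saliency[r][c] for all r < i and c < j.
    S = [[0.0] * (w + 1) for _ in range(h + 1)]
    for i in range(h):
        row_sum = 0.0
        for j in range(w):
            row_sum += saliency[i][j]
            S[i + 1][j + 1] = S[i][j + 1] + row_sum
    best, best_pos = float("-inf"), (0, 0)
    # Exhaustive search over all window positions, O(1) per window.
    for top in range(h - crop_h + 1):
        for left in range(w - crop_w + 1):
            bottom, right = top + crop_h, left + crop_w
            total = (S[bottom][right] - S[top][right]
                     - S[bottom][left] + S[top][left])
            if total > best:
                best, best_pos = total, (top, left)
    return best_pos
```

For instance, `best_crop(saliency_map, 90, 160)` would return the top-left corner of the most salient 90 x 160 window. Practical repurposing methods may add further constraints on top of this basic criterion, such as temporal smoothness of the window for video.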
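The reconstruction-from-edges idea behind data pruning can be illustrated with the simplest diffusion scheme. The sketch below assumes homogeneous (linear) diffusion solved with Jacobi iterations: stored pixels, such as edge pixels, stay fixed, and every other pixel is repeatedly replaced by the mean of its 4-neighbours. This is an illustrative choice, not necessarily the scheme used in Y. Andam's thesis or in Weickert's work, and `diffuse_from_mask` is a hypothetical helper.

```python
def diffuse_from_mask(image, known, iterations=2000):
    """Fill unknown pixels by homogeneous diffusion from the known ones.

    image: 2-D list of floats; only pixels where known[i][j] is True are used.
    known: 2-D list of booleans marking the stored pixels (e.g. edge pixels).
    Each Jacobi iteration replaces every unknown pixel by the mean of its
    in-bounds 4-neighbours; at convergence this solves the discrete Laplace
    equation with the known pixels as fixed data (reflecting image borders).
    """
    h, w = len(image), len(image[0])
    # Unknown pixels start at 0; their initial value in `image` is ignored.
    u = [[image[i][j] if known[i][j] else 0.0 for j in range(w)] for i in range(h)]
    for _ in range(iterations):
        new = [row[:] for row in u]
        for i in range(h):
            for j in range(w):
                if known[i][j]:
                    continue  # stored pixels are never modified
                nbrs = [u[y][x]
                        for y, x in ((i - 1, j), (i + 1, j), (i, j - 1), (i, j + 1))
                        if 0 <= y < h and 0 <= x < w]
                if nbrs:
                    new[i][j] = sum(nbrs) / len(nbrs)
        u = new
    return u
```

Plain Jacobi iterations converge slowly on real images, so the fixed `iterations` count is only for illustration. The quality of the reconstruction depends strongly on which pixels are kept, which is where a saliency map could guide the pruning.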

Teaching

Lecture

ESIR-IFSIC: here

- ESIR 3 (2009-2014):

- Introduction to information theory:

- Tools for data compression (DIIC 3 INC and LSI [pdf] (last update September 2011))

- Picture coding (JPEG and JPEG2000) (DIIC 3 INC [pdf] (last update 18/11/09))

- Video compression (MPEG-2) (DIIC 3 INC [pdf] (last update 18/11/09))

- Video compression (H.264) (ESIR 3 IN [pdf] (last update 01/10/12))

- ESIR 3 CAV option:

- Lecture on 3D (perception, representation and compression) [pdf]

- Lecture on HEVC (the new compression standard) [pdf]

- Projects 2012-2014: [pdf], practical works description [pdf].

- DIIC 3 INC tests 2009-10 [pdf]

- ESIR 2 IN ACV tests 2010-11 [pdf]

- ESIR 2 (TSI)(2010-2015):

- Image processing: Fourier transform (FT, DFT,...) (ESIR2 INC [pdf] [TD1][TD1 correction])

- Image processing: linear, non-linear filtering, image restoration, seam carving, diffusion, inpainting... (ESIR2 INC [pdf] (last update 05/12/10), 59 MB!)

- Elements of information theory (ESIR2 INC [pdf]) (Some demonstrations [pdf])

- Compression (ESIR2 INC [pdf])

- Open house ESIR demo [here]

- Master MITIC VIS (2010-2012): here

- Introduction to image processing (transform, quantization...) [pdf]

- Image filtering [pdf]

- Compression in a nutshell... [pdf].

- DS: [DS 2010-2011]

- Practical works (TPs): [tp1], [resources], [tp2], [tp3], [resources], [tp4], [resources]

- Selective visual attention: from experiments to computational models [pdf] (91 MB!)

Examples of Practical Works:

Examples of practical works (seam carving, quality assessment, compression) are available on this page: [here] NEW!

Publications

Complete list on:

Talks / Granted projects

- Tutorial IEEE VR 2019 Eye-tracking in 360: Methods, Challenges, and Opportunities [Here]

- Tutorial VISIGRAPP 2019 A guided tour of computational modelling of visual attention [Here]

- Kepaf conference ([webPage]), Visual attention: Models, Performances and Applications, [pdf]

- GdR ISIS (2013), Visual attention: Inter-Observer Visual Congruency (IOVC) and memorability prediction, [pdf]

- Invited talks in China: Exemplar-based inpainting method [pdf] (400 MB!)

- University of Electronic Science and Technology of China, School of Electronic Engineering, Prof. Bing ZENG and Prof. Hongliang LI;

- Sichuan University, Prof. Qionghua WANG

- Saliency-aware High-resolution Video Processing (SHIVPRO), Marie Curie International Incoming Fellowship within the 7th European Community Framework Programme (PF7-PEOPLE-2011-2015-IIF): [webPage]

PhD Thesis - HDR

O. Le Meur, Visual attention modelling and applications. Towards perceptual-based editing methods, Habilitation à Diriger des Recherches (HDR), University of Rennes 1, 2014. [pdf] [presentation] [slides]

O. Le Meur, Attention sélective en visualisation d'images fixes et animées affichées sur écran: modèles et évaluation de performances - applications (Selective attention when viewing still and moving images displayed on a screen: models and performance evaluation - applications), University of Nantes, 2005. [pdf]

- Sensitivity of human visual attention to coding artifacts: here

B. Follet, Ambient/focal visual fixations dichotomy in natural scene perception, University of Paris VIII, 2012. [PhD defense pdf]

J. Gautier, A Dynamic Visual Attention Model for 2D and 3D conditions - Depth Coding and Inpainting-based Synthesis for Multiview Videos, IRISA / University of Rennes I, 2012. [PhD thesis pdf] [Home page]

D. Khaustova, Objective assessment of stereoscopic video quality of 3DTV, IRISA / University of Rennes I, 2014.

M. Ebdelli, Video inpainting techniques: application to object removal and error concealment, IRISA / University of Rennes I, 2014. [pdf]

N. Dhollande, Optimisation du codage HEVC par des moyens de pré-analyse et/ou de pré-codage du contenu (Optimizing HEVC coding through pre-analysis and/or pre-coding of the content), IRISA / University of Rennes I, 2017.

H. Hristova, Example-guided image editing, IRISA / University of Rennes I, 2017.

D. Kuzovkin, Assessment of photos in albums based on aesthetics and context, IRISA / University of Rennes I, 2019. [pdf]

M. Rousselot, Optimisation du comportement des encodeurs pour la compression d'images face aux nouveaux standards de télévision (Optimizing encoder behavior for image compression in the face of new television standards), IRISA / University of Rennes I, 2019.

Book Chapters

- M. Mancas and O. Le Meur, Applications of Saliency Models, book chapter in From Human Attention to Computational Attention, M. Mancas, V. P. Ferrera, N. Riche, J. G. Taylor, Springer Series in Cognitive and Neural Systems, 2016.

- O. Le Meur and M. Mancas, Computational models of visual attention and applications, book chapter in Biologically-inspired Computer Vision: Fundamentals and Applications, G. Cristobal, L. Perrinet and M. Keil (Eds.), Wiley-VCH, [link], 2015.

- T. Baccino, O. Le Meur and B. Follet, La vision ambiante-focale dans l'observation de scènes visuelles (Ambient-focal vision in the observation of visual scenes), book chapter in A perte de vue les nouveaux paradigmes du visuel, Presse du réel, [link], 2015.

- L. Morin, O. Le Meur, C. Guillemot, V. Jantet and J. Gautier, Synthèse de vues intermédiaire (Intermediate view synthesis), book chapter in Vidéo 3D: Capture, traitement et diffusion, L. Lucas, C. Loscos and Y. Remion (Eds.), Hermès, 2013.

Journals

38. A.F. Perrin, V. Krassanakis, L. Zhang, V. Ricordel, M. Perreira Da Silva and O. Le Meur, EyeTrackUAV2: a Large-Scale Binocular Eye-Tracking Dataset for UAV Videos, Drones, 4(1), 2, 2020.

37. L. Maczyta, P. Bouthemy and O. Le Meur, CNN-based temporal detection of motion saliency in videos, Pattern Recognition Letters, Elsevier, vol. 128, pp. 298-305, 2019.

36. X. Liu, Z. Liu, Q. Jiao, O. Le Meur, W. Zhao, Saliency-aware inter-image color transfer for image manipulation, Multimedia Tools and Applications, 2019.

35. M. Rousselot, O. Le Meur, R. Cozot and X. Ducloux, Quality Assessment of HDR/WCG Images Using HDR Uniform Color Spaces, J. Imaging 2019, 5(1), 18 (This article belongs to the Special Issue Multimedia Content Analysis and Applications).

34. H. Hristova, O. Le Meur, R. Cozot and K. Bouatouch, Multi-purpose bi-local CAT-based guidance filter, accepted in Signal Processing: Image Communication, 2018.

33. L. Wu, Z. Liu, H. Song and O. Le Meur, RGBD co-saliency detection via multiple kernel boosting and fusion, Springer, Multimedia Tools and Applications, pp. 1-15, 2018.

32. H. Hristova, O. Le Meur, R. Cozot and K. Bouatouch, Transformation of the multivariate generalized Gaussian distribution for image editing, IEEE Transactions on Visualization and Computer Graphics, 2017.

31. O. Le Meur, A. Coutrot, Z. Liu, P. Rämä, A. Le Roch, and A. Helo, Visual attention saccadic models learn to emulate gaze patterns from childhood to adulthood, IEEE Transactions on Image Processing, 2017. doi: 10.1109/TIP.2017.2722238.

30. I. Thirunarayanan, K. Khetarpal, S. Koppal, O. Le Meur, J. Shea, E. Jain, Creating Segments and Effects on Comics by Clustering Gaze Data, ACM Transactions on Multimedia Computing Communications and Applications, 13(3), 24, 2017. [preprint] [project page]

29. H. Song, Z. Liu, H. Du, G. Sun, O. Le Meur, and T. Ren, Depth-aware salient object detection and segmentation via multiscale discriminative saliency fusion and bootstrap learning, IEEE Transactions on Image Processing, 2017. doi: 10.1109/TIP.2017.2711277.

28. H. Hristova, O. Le Meur, R. Cozot and K. Bouatouch, High-Dynamic-Range Image Recovery from Flash and Non-Flash Image Pairs, The Visual Computer, 2017.

27. O. Le Meur, A. Coutrot, Z. Liu, A. Le Roch, A. Helo and P. Rama, Computational Model for Predicting Visual Fixations from Childhood to Adulthood, arXiv preprint arXiv:1702.04657 link, 2017.

26. P. Buyssens, O. Le Meur, M. Daisy, D. Tschumperlé and O. Lézoray, Depth-guided disocclusion inpainting of synthesized RGB-D images, IEEE Transactions on Image Processing, 2016 [IF=3.042].

25. O. Le Meur and A. Coutrot, Introducing context-dependent and spatially-variant viewing biases in saccadic models, Vision Research, 2016. [Presentation] [project page] [pdf] [IF=2.381]

24. M. Ebdelli, O. Le Meur and C. Guillemot, Video inpainting with short-term windows: application to object removal and error concealment, IEEE TIP, 2015. [project page] [pdf]

23. J. Li, Z. Liu, X. Zhang, O. Le Meur and L. Shen, Spatiotemporal Saliency Detection Based on Superpixel-level Trajectory, accepted for publication in Signal Processing: Image Communication, May 2015.

22. D. Khaustova, J. Fournier and O. Le Meur, An Objective Metric for Video Quality Prediction Using Perceptual Thresholds, in SMPTE Journal, April 2015.

21. O. Le Meur and Z. Liu, Saccadic model of eye movements for free-viewing condition, Vision Research, Vol. 116, pp. 152-164, 2015 [Presentation]. [project page] [pdf] [IF=2.381].

20. Z. Liu, X. Zhang, S. Luo and O. Le Meur, Superpixel-Based Spatiotemporal Saliency Detection, IEEE TCSVT, 2014. More details in this [webPage]

19. Z. Liu, W. Zou and O. Le Meur, Saliency Tree: A Novel Saliency Detection Framework, IEEE TIP, 2014 [Single column paper]. More details in this [webPage]

18. Z. Liu, W. Zou, L. Li, L. Shen and O. Le Meur, Co-saliency detection based on hierarchical segmentation, IEEE Signal Processing Letters, vol. 21(1), 2014 [pdf] [IF=1.674].

17. C. Guillemot and O. Le Meur, Image inpainting: overview and recent advances, IEEE Signal Processing Magazine, January 2014. [IF=3.368]

16. C. Guillemot, M. Turkan and O. Le Meur, Object removal and loss concealment using neighbor embedding methods, Elsevier Signal Processing: Image Communication, 2013.

15. O. Le Meur, M. Ebdelli and C. Guillemot, Hierarchical super-resolution-based inpainting, IEEE TIP, vol. 22(10), pp. 3779-3790, 2013. [Project page] [IF=3.042]

14. Z. Liu, O. Le Meur, S. Luo and L. Shen, Saliency detection using regional histograms, Optics Letters, Vol. 38, N°5, March 2013 [pdf] [Results] [IF=3.39].

13. O. Le Meur and T. Baccino, Methods for comparing scanpaths and saliency maps: strengths and weaknesses, Behavior Research Methods, vol. 45, N°1, pp. 251-266, 2013 [IF=2.4]. [Project page]

12. J. Gautier and O. Le Meur, A time-dependent saliency model mixing center and depth bias for 2D and 3D viewing conditions, Cognitive Computation 2012, DOI: 10.1007/s12559-012-9138-3. [web page (paper)]

11. B. Follet, O. Le Meur and T. Baccino, New insights on ambient and focal visual fixations using an automatic classification algorithm, i-Perception, vol. 2(6), pp. 592-610, 2011. [i-perception] [web page (paper)]

10. F. Urban, B. Follet, C. Chamaret, O. Le Meur and T. Baccino, Medium spatial frequencies, a strong predictor of salience, Cognitive Computation, vol. 3, Issue 1, pp. 37-47, 2011. DOI: 10.1007/s12559-010-9086-8 (Special issue on Saliency, Attention, Active Visual Search, and Picture Scanning). [Springer Link] [Full text] [Preprint]

9. B. Follet, O. Le Meur and T. Baccino, Modeling visual attention on scenes, Studia Informatica Universalis, Vol. 8, Issue 4, pp. 150-167, 2010. [pdf]

8. O. Le Meur, A. Ninassi, P. Le Callet and D. Barba, Do video coding impairments disturb the visual attention deployment?, Elsevier, Signal Processing: Image Communication, vol. 25, Issue 8, pp. 597-609, September 2010. DOI information: 10.1016/j.image.2010.05.008 [preprint pdf] [IF=0.836] [Project page]

7. O. Le Meur, A. Ninassi, P. Le Callet and D. Barba, Overt visual attention for free-viewing and quality assessment tasks. Impact of the regions of interest on a video quality metric, Elsevier, Signal Processing: Image Communication, vol. 25, Issue 7, pp. 547-558, August 2010. DOI information: 10.1016/j.image.2010.05.006 [preprint pdf] [IF=0.836]

6. O. Le Meur and J.C. Chevet, Relevance of a feed-forward model of visual attention for goal-oriented and free-viewing tasks, IEEE Trans. on Image Processing, vol. 19, No. 11, pp. 2801-2813, November 2010. DOI information: 10.1109/TIP.2010.2052262 [preprint pdf] [IF=3.315]

5. O. Le Meur, S. Cloarec and P. Guillotel, Automatic content repurposing for mobile applications, SMPTE Motion Imaging journal, January/February 2010.

4. A. Ninassi, O. Le Meur, P. Le Callet and D. Barba, Considering temporal variations of spatial visual distortions in video quality assessment, IEEE Journal of Selected Topics in Signal Processing, Special Issue On Visual Media Quality Assessment, 2009. [pdf]

3. O. Le Meur, P. Le Callet and D. Barba, Predicting visual fixations on video based on low-level visual features, Vision Research, Vol. 47/19 pp 2483-2498, Sept. 2007. [pdf] [IF=2.05]

2. O. Le Meur, P. Le Callet and D. Barba, Construction d'images miniatures avec recadrage automatique basée sur un modèle perceptuel bio-inspiré (Thumbnail creation with automatic cropping based on a bio-inspired perceptual model), special issue of the journal Traitement du Signal (TS), Systèmes de traitement et d'analyse des Images, Vol. 24, N°5, pp. 323-336, 2007. [pdf]

1. O. Le Meur, P. Le Callet, D. Barba and D. Thoreau, A coherent computational approach to model the bottom-up visual attention, IEEE Trans. on Pattern Analysis and Machine Intelligence, Vol. 28, N°5, May 2006. [pdf] [IF=4.3]

Conferences

2020

- F. Berton, L. Hoyet, A. H. Olivier, J. Bruneau, O. Le Meur, J. Pettré (2020, March). Eye-Gaze Activity in Crowds: Impact of Virtual Reality and Density. In 2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (pp. 322-331). IEEE.

- O. Le Meur, P. A. Fons (2020, June). Predicting image influence on visual saliency distribution: the focal and ambient dichotomy. In ETRA.

2019

- M. Rousselot, X. Ducloux, O. Le Meur, R. Cozot, Quality metric aggregation for HDR/WCG images. International Conference on Image Processing (ICIP), Sep 2019, Taipei, Taiwan

- A. Bruckert, Y. H. Lam, M. Christie, O. Le Meur, Deep learning for inter-observer congruency prediction. ICIP 2019 – IEEE International Conference on Image Processing, Sep 2019, Taipei, Taiwan. pp.3766-3770

- L. Maczyta, P. Bouthemy, O. Le Meur, Unsupervised motion saliency map estimation based on optical flow inpainting. ICIP 2019 – IEEE International Conference on Image Processing, Sep 2019, Taipei, Taiwan. pp.4469-4473

- A. Nebout, W. Wei, Z. Liu, L. Huang, O. Le Meur, Predicting saliency maps for ASD people. ICME Workshop, Jul 2019, Shanghai, China

- W. Wei, Z. Liu, L. Huang, A. Nebout, O. Le Meur, Saliency prediction via multi-level features and deep supervision for children with autism spectrum disorder. ICME Workshop, Jul 2019, Shanghai, China

2018

- B. John, P. Raiturkar, O. Le Meur and E. Jain, A Benchmark of Four Methods for Generating 360 Saliency Maps from Eye Tracking Data, IEEE AIVR.

- L. Maczyta, P. Bouthemy and O. Le Meur, Détection de la saillance dynamique dans des vidéos par apprentissage profond (Dynamic saliency detection in videos using deep learning), RFIAP, 2018.

- M. Rousselot, E. Auffret, X. Ducloux, R. Cozot, and O. Le Meur, Adapting HDR images using uniform color space for SDR quality metrics, CORESA, 2018.

- M. Rousselot, E. Auffret, X. Ducloux, R. Cozot and O. Le Meur, Impacts of Viewing Conditions on HDR-VDP2, EUSIPCO, 2018.

- T. Zhang and O. Le Meur, How old do you look? Inferring your age from your gaze, ICIP, 2018.

- K. Bannier, E. Jain and O. Le Meur, DeepComics: saliency estimation for comics, ETRA, 2018. [Here]

- D. Kuzovkin, T. Pouli, R. Cozot, O. Le Meur, J. Kervec and K. Bouatouch, Image Selection in Photo Albums, ICMR, 2018.

- H. Hristova, O. Le Meur, R. Cozot and K. Bouatouch, Transformation of the Beta distribution for color transfer methods, full paper, GRAPP (part of VISIGRAPP, the 13th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications), 2018. [pdf]

- A. Helo, O. Le Meur, A. Coutrot, Z. Liu, P. Rämä and A. Le Roch, Age-dependent saccadic models, ICIS Meeting in Philadelphia, 2018.

2017

- T. Maugey, O. Le Meur, and Z. Liu, Saliency-based navigation in omnidirectional image, IEEE 19th International Workshop on Multimedia Signal Processing, 2017. [Project page]

- O. Le Meur, A. Coutrot, Z. Liu, P. Rämä, A. Le Roch, and A. Helo, Your Gaze Betrays Your Age, EUSIPCO, 2017. [pdf] [Presentation]

- D. Kuzovkin, T. Pouli, R. Cozot, O. Le Meur, J. Kervec and K. Bouatouch, Context-aware Clustering and Assessment of Photo Collections, Expressive, 2017.

- O. Le Meur, A. Coutrot, A. Le Roch, A. Helo, P. Rämä and Z. Liu, Age-dependent saccadic models for predicting eye movements, ICIP, 2017.

- H. Hristova, O. Le Meur, R. Cozot and K. Bouatouch, Perceptual metric for color transfer methods, ICIP 2017. [Project page] [SOFT]

- H. Hristova, O. Le Meur, R. Cozot and K. Bouatouch, High-Dynamic-Range Image Recovery from Flash and Non-Flash Image Pairs, CGI (Computer Graphics International), 2017. BEST PAPER AWARD

2016

- A. Coutrot and O. Le Meur, Visual attention saccadic models: taking into account global scene context and temporal aspects of gaze behaviour, ECVP, 2016. [pdf]

- O. Le Meur and A. Coutrot, How saccadic models help predict where we look during a visual task? Application to visual quality assessment, SPIE Electronic Imaging, Image Quality and System Performance XIII, 2016.

- P. Buyssens, O. Le Meur, M. Daisy, D. Tschumperlé and O. Lézoray, Désoccultation de cartes de profondeurs pour la synthèse de vues virtuelles (Depth map disocclusion for virtual view synthesis), RFIA 2016, June 2016, Clermont-Ferrand, France. [HAL]

2015

- N. Dhollande, X. Ducloux, O. Le Meur and C. Guillemot, Fast block partitioning method in HEVC Intra coding for UHD video, IEEE ICCE, 2015. [Presentation pptx]

- H. Hristova, O. Le Meur, R. Cozot and K. Bouatouch, Style-aware robust color transfer, Expressive 2015 (International Symposium on Computational Aesthetics in Graphics, Visualization, and Imaging). [Presentation (slideShare)],[H. Hristova's webpage]

- H. Hristova, O. Le Meur, R. Cozot and K. Bouatouch, Color transfer between high-dynamic-range images, SPIE Optical Engineering + Applications, Applications of Digital Image Processing XXXVIII, 2015. [Presentation (slideShare)], [pdf]

- D. Khaustova, J. Fournier, E. Wyckens and O. Le Meur, An objective method for 3D quality prediction using perceptual thresholds and acceptability, SPIE Stereoscopic Displays and Applications XXVI, vol. 90140D, 2015.

2014

- O. Le Meur and Z. Liu, Saliency aggregation: Does unity make strength?, ACCV, 2014. [pdf] [poster][Acceptance Rate=27%]

- L. Li, Z. Liu, W. Zou, X. Zhang and O. Le Meur, Co-saliency detection based on region-level fusion and pixel-level refinement, ICME, 2014

- N. Dhollande, O. Le Meur and C. Guillemot, HEVC intra coding of Ultra HD video with reduced complexity, ICIP, 2014

- J.C. Ferreira, O. Le Meur, E.A.B. da Silva, G.A. Carrijo and C. Guillemot, Single image super-resolution using sparse representations with structure constraints, ICIP, 2014 [Project page]

- D. Khaustova, J. Fournier, E. Wyckens and O. Le Meur, Investigation of visual attention priority in selection of objects with texture, crossed, and uncrossed disparities in 3D images, HVEI, 2014 [pdf] [presentation]

2013

- D. Wolinski, O. Le Meur and J. Gautier, 3D view synthesis with inter-view consistency, ACM Multimedia 2013. [pdf] [Project page].

- Z. Liu and O. Le Meur, Superpixel-based saliency detection, WIAMIS 2013, [pdf].

- S. Luo, Z. Liu, L. Li, X. Zou and O. Le Meur, Efficient saliency detection using regional color and spatial information, EUVIP, 2013 [pdf].

- M. Mancas and O. Le Meur, Memorability of natural scene: the role of attention, ICIP 2013 [Project page].

- C. Guillemot, M. Turkan, O. Le Meur and M. Ebdelli, Image inpainting using LLE-LDNR and linear subspace mappings, ICASSP 2013.

- M. Ebdelli, O. Le Meur and C. Guillemot, Analysis of patch-based similarity metrics: application to denoising, ICASSP 2013.

- D. Khaustova, J. Fournier, E. Wyckens and O. Le Meur, How visual attention is modified by disparities and textures changes?, SPIE HVEI 2013. [Acceptance Rate=xx%] [pdf] [presentation] [Project page].

2012

- O. Le Meur and C. Guillemot, Super-resolution-based inpainting, ECCV 2012. [Acceptance Rate=25%] [pdf] [poster] [Masks and Pictures] [PPT version]

- M. Ebdelli, O. Le Meur and C. Guillemot, Loss Concealment Based on Video Inpainting for Robust Video Communication, EUSIPCO 2012. [Acceptance Rate=xx%] [pdf] [presentation] With video examples.

- M. Ebdelli, C. Guillemot and O. Le Meur, Examplar-based video inpainting with motion-compensated neighbor embedding, ICIP 2012. [Acceptance Rate=xx%] [pdf]

- J. Gautier, O. Le Meur and C. Guillemot, Efficient Depth Map Compression based on Lossless Edge Coding and Diffusion, PCS 2012. [pdf] [poster]

2011

- O. Le Meur, T. Baccino and A. Roumy, Prediction of the Inter-Observer Visual Congruency (IOVC) and application to image ranking, ACM Multimedia (long paper) 2011. [Acceptance Rate=17%] [pdf] [presentation] [poster] [Project page]

- B. Follet, O. Le Meur and T. Baccino, Features of ambient and focal fixations on natural visual scenes, ECEM 2011. [pdf]

- O. Le Meur, Robustness and Repeatability of saliency models subjected to visual degradations, ICIP 2011. [pdf] [pdf poster]

- O. Le Meur, J. Gautier and C. Guillemot, Examplar-based inpainting based on local geometry, ICIP 2011. [Project page] [pdf] [pdf poster]

- J. Gautier, O. Le Meur and C. Guillemot, Depth-based image completion for View Synthesis, 3DTV Conf. 2011. [Acceptance Rate=??%] [pdf] [Project page]

- O. Le Meur, Predicting saliency using two contextual priors: the dominant depth and the horizon line, IEEE International Conference on Multimedia & Expo (ICME 2011) 2011 [Acceptance Rate=30%] [pdf] [poster] [Project page]

2010

- C. Chamaret, O. Le Meur and J.C. Chevet, Spatio-temporal combination of saliency maps and eye-tracking assessment of different strategies, ICIP, pp. 1077-1080, 2010 [Acceptance Rate=47%] [pdf] [pdf poster]

- C. Chamaret, O. Le Meur, P. Guillotel and J.C. Chevet, How to measure the relevance of a retargeting approach?, Workshop Media retargeting, ECCV 2010. [pdf] [pdf presentation] [Project page]

- C. Chamaret, S. Godeffroy, P. Lopez and O. Le Meur, Adaptive 3D Rendering based on Region-of-Interest, SPIE 2010 [pdf]

2009

- O. Le Meur and P. Le Callet, What we see is most likely to be what matters: visual attention and applications, ICIP, pp. 3085-3088, 2009 [pdf] [more here] [Acceptance Rate=45%]

2008

- C. Chamaret and O. Le Meur, Attention-based video reframing: validation using eye-tracking, ICPR, 2008. [pdf]

- A. Ninassi, O. Le Meur, P. Le Callet, D. Barba, Which Semi-Local Visual Masking Model For Wavelet Based Image Quality Metric?, ICIP, 2008. [pdf]

2007

- A. Ninassi, O. Le Meur, P. Le Callet, D. Barba, Does where you gaze on an image affect your perception of quality? Applying visual attention to image quality metric, ICIP, 2007. [pdf] [oral presentation]

2006

- A. Ninassi, O. Le Meur, P. Le Callet, D. Barba, Task impact on the visual attention in subjective image quality assessment, EUSIPCO, 2006. [pdf] [oral presentation]

- O. Le Meur, X. Castellan, P. Le Callet, D. Barba, Efficient saliency-based repurposing method, ICIP, 2006. [pdf] [poster]

Before 2006

- O. Le Meur, P. Le Callet, D. Barba, D. Thoreau, A human visual model-based approach of the visual attention and performance evaluation, SPIE HVEI, 2005. [pdf]

- O. Le Meur, P. Le Callet, D. Barba, D. Thoreau, Modelisation spatio-temporelle de l'attention visuelle (Spatio-temporal modelling of visual attention), CORESA, 2005. [pdf]

- O. Le Meur, P. Le Callet, D. Barba, D. Thoreau, E. Francois, From low level perception to high level perception, a coherent approach for visual attention modeling, SPIE HVEI, 2004. [pdf].

- O. Le Meur, P. Le Callet, D. Barba, D. Thoreau, Performance assessment of a visual attention system entirely based on a human vision modelling, ICIP, 2004. [pdf] [poster]

- O. Le Meur, P. Le Callet, D. Barba, D. Thoreau, Bottom-up visual attention modeling: quantitative comparison of predicted saliency maps with observers eye-tracking data, ECVP, 2004. [poster]